Advanced Guide¶

Install Edge Insights for Industrial from source code¶

By default, EII is installed via Edge Software Hub after downloading the EII package and running command ./edgesoftware install. This is the recommended installation when you want to preview EII stack.

If you are more interested in knowing different EII configurations that could be exercised or wish to customize the EII source code, please check the below sections:

Complete the following tasks to install EII manually.

Task 1: Install Prerequisites¶

The pre_requisites.sh script automates the installation and configuration of all the prerequisites required for building and running the EII stack. The prerequisites are as follows:

docker daemon

docker client

docker-compose

Python packages

The pre-requisites.sh script performs the following:

Checks if docker and docker-compose is installed in the system. If required, it uninstalls the older version and installs the correct version of docker and docker-compose.

Configures the proxy settings for the docker client and docker daemon to connect to the internet.

Configures the proxy settings system-wide (/etc/environment) and for docker. If a system is running behind a proxy, then the script prompts users to enter the proxy address to configure the proxy settings.

Configures proxy setting for /etc/apt/apt.conf to enable apt updates and installations.

Note

The recommended version of the docker-compose is

1.29.0. In versions older than 1.29.0, the video use case docker-compose.yml files and the device_cgroup_rules command may not work.To use versions older than docker-compose 1.29.0, in the

ia_video_ingestionandia_video_analyticsservices, comment out thedevice_cgroup_rulescommand.You can comment out the

device_cgroup_rulescommand in theia_video_ingestionandia_video_analyticsservices to use versions older than 1.29.0 of docker-compose. This can result in limited inference and device support. The following code sample shows how thedevice_cgroup_rulescommands are commented out:ia_video_ingestion: ... #device_cgroup_rules: #- 'c 189:* rmw' #- 'c 209:* rmw'

After modifying the docker-compose.yml file, refer to the Using the Builder script section. Before running the services using the docker-compose up command, rerun the builder.py script.

Run the Prerequisites Script¶

To run the prerequisite script, execute the following commands:

cd [WORKDIR]/IEdgeInsights/build

sudo -E ./pre_requisites.sh --help

Usage :: sudo -E ./pre_requisites.sh [OPTION...]

List of available options...

--proxy proxies, required when the gateway/edge node running EII (or any of EII profile) is connected behind proxy

--help / -h display this help and exit

Note

If the –proxy option is not provided, then script will run without proxy. Different use cases are as follows:

Runs without proxy

sudo -E ./pre_requisites.sh

Runs with proxy

sudo -E ./pre_requisites.sh --proxy="proxy.intel.com:891"

Optional Steps¶

If required, you can enable full security for production deployments. Ensure that the host machine and docker daemon are configured per the security recommendation. For more info, see build/docker_security_recommendation.md.

If required, you can enable log rotation for docker containers using any of the following methods:

Method 1¶

Set the logging driver as part of the docker daemon. This applies to all the docker containers by default.

Configure the json-file driver as the default logging driver. For more info, see JSON File logging driver. The sample json-driver configuration that can be copied to

/etc/docker/daemon.jsonis as follows:{ "log-driver": "json-file", "log-opts": { "max-size": "10m", "max-file": "5" } }

Run the following command to reload the docker daemon:

sudo systemctl daemon-reload

Run the following command to restart docker:

sudo systemctl restart docker

Method 2¶

Set logging driver as part of docker compose which is container specific. This overwrites the first option (i.e /etc/docker/daemon.json). The following example shows how to enable the logging driver only for the video_ingestion service:

ia_video_ingestion:

...

...

logging:

driver: json-file

options:

max-size: 10m

max-file: 5

Task 2: Generate the Deployment and the Configuration Files¶

After downloading EII from the release package or git, run the commands mentioned in this section from the [WORKDIR]/IEdgeInsights/build/ directory.

Use the Builder Script¶

Note

:

To run the builder.py script, complete the prerequisite by entering the values for the following keys in build/.env:

ETCDROOT_PASSWORD – The value for this key is required, if you are using the ConfigMgrAgent and the EtcdUI services.

INFLUXDB_USERNAME, INFLUXDB_PASSWORD, MINIO_ACCESS_KEY, and MINIO_SECRETKEY – The values for these keys are required, if you are using the Data Store service. Special characters ``~:’+[/@^{%(-“*|,&<``}.=}!>;?#$)` are not allowed for the INFLUXDB_USERNAME and INFLUXDB_PASSWORD. The MINIO_ACCESS_KEY and the MINIO_SECRET_KEY length must be a minimum of 8 characters. If you enter wrong values or do not enter the values for the keys, the

builder.pyscript prompts for corrections or values.PKG_SRC - The value will be pre-populated with the local http server daemon which is brought up by the

./edgesoftware installcommand when installed from Edge Software Hub. By default, the EII core libs and other artifacts would be picked up from$HOME/edge_insights_industrial/Edge_Insights_for_Industrial_<version>/CoreLibsdirectory.

To use the builder.py script, run the following command:

python3 builder.py -h

usage: builder.py [-h] [-f YML_FILE] [-v VIDEO_PIPELINE_INSTANCES]

[-d OVERRIDE_DIRECTORY] [-s STANDALONE_MODE] [-r REMOTE_DEPLOYMENT_MODE]

optional arguments:

-h, --help show this help message and exit

-f YML_FILE, --yml_file YML_FILE

Optional config file for list of services to include.

Eg: python3 builder.py -f video-streaming.yml (default: None)

-v VIDEO_PIPELINE_INSTANCES, --video_pipeline_instances VIDEO_PIPELINE_INSTANCES

Optional number of video pipeline instances to be

created.

Eg: python3 builder.py -v 6 (default: 1)

-d OVERRIDE_DIRECTORY, --override_directory OVERRIDE_DIRECTORY

Optional directory consisting of benchmarking

configs to be present in each app directory.

Eg: python3 builder.py -d benchmarking (default: None)

-s STANDALONE_MODE, --standalone_mode STANDALONE_MODE

Standalone mode brings in changes to support independently

deployable services.

Eg: python3 builder.py -s True (default: False)

-r REMOTE_DEPLOYMENT_MODE, --remote_deployment_mode REMOTE_DEPLOYMENT_MODE

Remote deployment mode brings in changes to support remote deployment

wherein builder does not auto-populate absolute paths of build

related variables in the generated docker-compose.yml

Eg: python3 builder.py -r True (default: False)

Generate Consolidated Files for All Applicable Services of Edge Insights for Industrial¶

Using the Builder tool, EII auto-generates the configuration files that are required for deploying the EII services on a single node or multiple nodes. The Builder tool auto-generates the consolidated files by getting the relevant files from the EII service directories that are required for different EII use-cases. The Builder tool parses the top-level directories excluding VideoIngestion and VideoAnalytics under the IEdgeInsights directory to generate the consolidated files. The VideoIngestion and VideoAnalytics are excluded since we will be using EdgeVideoAnalyticsMicroservice as the default primary analytics pipeline moving forward.

The following table shows the list of consolidated files and their details:

Table: Consolidated files

File Name |

Description |

|---|---|

docker-compose.yml |

Consolidated |

docker-compose.override.yml |

Consolidated |

eii_config.json |

Consolidated |

values.yaml |

Consolidated |

Template yaml files |

Files copied from the helm/templates directory of every app to the helm-eii/eii-deploy/templates directory that is required to deploy EII services via helm. |

Note

If you modify an individual EII app or service directory file, then ensure to rerun the

builder.pyscript before running the EII stack to regenerate the updated consolidated files.Manual editing of consolidated files is not recommended. Instead modify the respective files in the EII app or service directories and use the

builder.pyscript to generate the consolidated files.Enter the secret credentials in the

# Service credentialssection of the .env([WORK_DIR]/IEdgeInsights/build/.env) file if you are trying to run that EII app/service. If the required credentials are not present, thebuilder.pyscript would be prompting until all the required credentials are entered. Apply a file access mask to protect the .env([WORK_DIR]/IEdgeInsights/build/.env) file from being read by unauthorized users.The builder_config.json(

[WORK_DIR]/IEdgeInsights/build/builder_config.json) is the config file for thebuilder.pyscript and it contains the following keys:subscriber_list: This key contains a list of services that act as a subscriber to the stream being published.publisher_list: This key contains a list of services that publishes a stream of data.include_services: This key contains the mandatory list of services. These services should be included when the Builder is run without the-fflag.exclude_services: This key contains the mandatory list of services. These services should be excluded when the Builder is run without the-fflag.increment_rtsp_port: This is a Boolean key. It increments the port number for the RTSP stream pipelines.

To generate the consolidated files, run the following command:

python3 builder.py

Generate Consolidated Files for a Subset of Edge Insights for Industrial Services¶

Builder uses a yml file for configuration. The config yml file consists of a list of services to include. You can mention the service name as the path relative to IEdgeInsights or full path to the service in the config yml file.

To include only a certain number of services in the EII stack, you can add the -f or yml_file flag of builder.py. You can find the examples of yml files for different use cases as follows:

Azure(

[WORK_DIR]/IEdgeInsights/build/usecases/video-streaming-azure.yml)The following example shows running Builder with the -f flag:

python3 builder.py -f usecases/video-streaming.yml

Main Use Cases

Use case |

yaml file |

|---|---|

Video + Time Series |

build/usecases/video-timeseries.yml( |

Video |

build/usecases/video.yml( |

Time Series |

build/usecases/time-series.yml( |

Video Pipeline Sub Use Cases

Use case |

yaml file |

|---|---|

Video streaming |

build/usecases/video-streaming.yml( |

Video streaming with EVAM |

build/usecases/video-streaming-evam.yml( |

Video streaming and historical |

build/usecases/video-streaming-storage.yml( |

Video streaming with AzureBridge |

build/usecases/video-streaming-azure.yml( |

Video streaming and custom udfs |

build/usecases/video-streaming-all-udfs.yml( |

When you run the multi-instance config, a build/multi_instance directory is created in the build directory. Based on the number of video_pipeline_instances specified, that many directories of EdgeVideoAnalyticsMicroservice are created in the build/multi_instance directory.

The following section provides an example for running the Builder to generate the multi-instance boiler plate config for 3 streams of video-streaming use case.

Generate Multi-instance Config Using the Builder¶

If required, you can generate the multi-instance docker-compose.yml and config.json files using the Builder. You can use the -v or video_pipeline_instances flag of the Builder to generate boiler plate config for the multiple-stream use cases. The -v or video_pipeline_instances flag creates the multi-stream boiler plate config for the docker-compose.yml and eii_config.json files.

The following example shows running builder to generate the multi-instance boiler plate config for 3 streams of video-streaming use case:

python3 builder.py -v 3 -f usecases/video-streaming-evam.yml

Using the previous command for 3 instances, the build/multi_instance directory consists of the following directories

EdgeVideoAnalyticsMicroservice1

EdgeVideoAnalyticsMicroservice2

EdgeVideoAnalyticsMicroservice3

Initially each directory will have the default config.json and the docker-compose.yml files that are present within the EdgeVideoAnalyticsMicroservice/eii directory.

./build/multi_instance/

|-- EdgeVideoAnalyticsMicroservice1

| |-- config.json

| `-- docker-compose.yml

|-- EdgeVideoAnalyticsMicroservice2

| |-- config.json

| `-- docker-compose.yml

|-- EdgeVideoAnalyticsMicroservice3

| |-- config.json

| `-- docker-compose.yml

You can edit the config of each of these streams within the ``build/multi_instance`` directory. To generate the consolidated ``docker compose`` and ``eii_config.json`` file, rerun the ``builder.py`` command.

Note

The multi-instance feature support of Builder works only for the video pipeline that is the usecases/video-streaming.yml and video-streaming-evam.yml use case and not with any other use case yml files like usecases/video-streaming-storage.yml and so on. Also, it doesn’t work for cases without the

-fswitch. The previous example will work with any positive number for-v. To learn more about using the multi-instance feature with the DiscoverHistory tool, see Multi-instance feature support for the builder script with the DiscoverHistory tool.If you are running the multi-instance config for the first time, it is recommended not to change the default

config.jsonfile and thedocker-compose.ymlfile in theEdgeVideoAnalyticsMicroservice/eiidirectory.If you are not running the multi-instance config for the first time, the existing

config.jsonanddocker-compose.ymlfiles in thebuild/multi_instancedirectory will be used to generate the consolidatedeii-config.jsonanddocker-composefiles.The

docker-compose.ymlfiles present within thebuild/multi_instancedirectory will have the following:the updated service_name, container_name, hostname, AppName, ports and secrets for that respective instance.

The

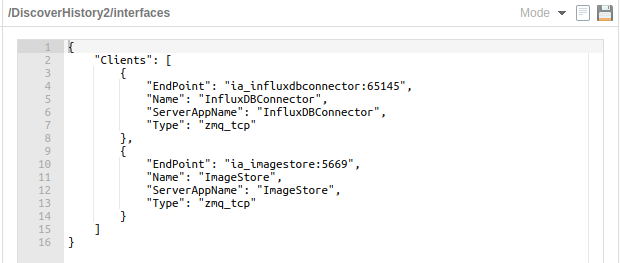

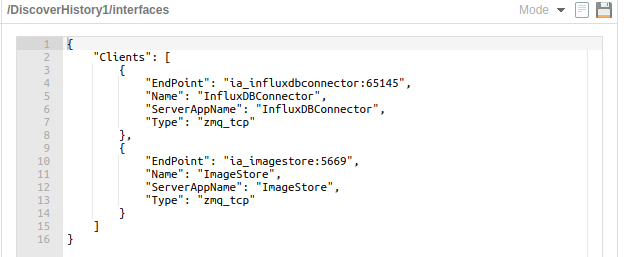

config.json filein thebuild/multi_instancedirectory will have the following:the updated Name, Type, Topics, Endpoint, PublisherAppname, ServerAppName, and AllowedClients for the interfaces section.

the incremented RTSP port number for the config section of that respective instance.

Ensure that all containers are down before running the multi-instance configuration. Run the

docker-compose downcommand before running thebuilder.pyscript for the multi-instance configuration.It is recommended to use either EdgeVideoAnalyticsMicroservice or VideoIngestion and VideoAnalytics and not both in the usecase yml files.

Generate Benchmarking Config Using Builder¶

To provide a different set of docker-compose.yml and config.json files than those found in each service directory, use the -d or the override directory flag. The -d flag instructs the program to look in the specified directory for the necessary set of files.

For example, to pick files from a directory named benchmarking, you can run the following command:

python3 builder.py -d benchmarking

Note

If you use the override directory feature of the builder then include all the 3 files mentioned in the previous example. If you do not include a file in the override directory, then the Builder will omit that service in the final config that is generated.

Adding the

AppNameof the subscriber container or client container in thesubscriber_list of builder_config.jsonallows you to spawn a single subscriber container or client container that is subscribing or receiving on multiple publishers or server containers.Multiple containers specified by the

-vflag is spawned for services that are not mentioned in thesubscriber_list. For example, if you run Builder with–v 3option andVisualizeris not added in thesubscriber_listofbuilder_config.jsonthen 3 instances of Visualizer are spawned. Each instance subscribes to 3 VideoAnalytics services. If Visualizer is added in thesubscriber_listofbuilder_config.json, a single Visualizer instance subscribing to 3 multiple VideoAnalytics is spawned.

Task 3: Build the Edge Insights for Industrial Stack¶

Note

For running the EII services in the IPC mode, ensure that the same user is mentioned in the publisher services and subscriber services.

If the publisher service is running as root, then the subscriber service should also run as root. For example, in the

docker-compose.ymlfile, if you have specifieduser: ${EII_UID}in the publisher service, then specify the sameuser: ${EII_UID}in the subscriber service. If you have not specified a user in the publisher service, then don’t specify the user in the subscriber service.If services need to be running in multiple nodes in the TCP mode of communication, msgbus subscribers, and clients of

AppNameare required to configure theEndPointinconfig.jsonwith theHOST_IPand thePORTunderSubscribers/PublishersorClients/Serversinterfaces section.Ensure that the port is being exposed in the

docker-compose.ymlof the respectiveAppName. For example, if the"EndPoint": <HOST_IP>:65012is configured in theconfig.jsonfile, then expose the port65012in thedocker-compose.ymlfile of theia_video_ingestionservice.

ia_edge_video_analytics_microservice:

...

ports:

- 65012:65012

Run all the following EII build and commands from the [WORKDIR]/IEdgeInsights/build/ directory.

EII supports the following use cases to run the services mentioned in the docker_compose.yml file. Refer to the Task 2 to generate the docker_compose.yml file for a specific use case. For more information and configuration, refer to the [WORK_DIR]/IEdgeInsights/README.md file.

Note

This is an optional step, if you want to use the EII pre-built container images and not build from source. For more details, refer to List of Distributed EII Services

Run the following command to build all EII services in the build/docker-compose.yml along with the base EII services.

docker-compose build

If any of the services fails during the build, then run the following command to build the service again:

docker-compose build --no-cache <service name>

Task 4: Deploy EII Services¶

Docker compose Deployment¶

This deployment primarily supports single node deployment

Independent building and deployment of services¶

All the EII services are aligning with the Microservice architecture principles of being Independently buildable and deployable.

Independently buildable and deployable feature is useful in allowing users to pick and choose only one service to build or deploy.

If one wants to run two or more microservices, we recommend to use the use-case driven approach as mentioned in Generate Consolidated Files for a Subset of Edge Insights for Industrial Services.

- The Independently buildable and deployable feature allows the users to build the individual service at the directory level and also allows the users to deploy the service in either of the two ways:

Without ConfigMgrAgent dependency:

- Deployment without ConfigMgrAgent dependency is only available in DEV mode where we make use of the ConfigMgr library config file APIs, by setting the `READ_CONFIG_FROM_FILE_ENV` value to `true` in the .env(`[WORK_DIR]/IEdgeInsights/build/.env`) file.

NOTE: We recommend the users to follow this simpler docker-compose deployment approach while adding in new services or debugging the existing service.

With ConfigMgrAgent dependency:

- Deployment with ConfigMgrAgent dependency is available in both DEV and PROD mode where we set the `READ_CONFIG_FROM_FILE_ENV` value to `false` in the .env(`[WORK_DIR]/IEdgeInsights/build/.env`) file and make use of the ConfigMgrAgent(`[WORK_DIR]/IEdgeInsights/ConfigMgrAgent/docker-compose.yml`) and the builder.py(`[WORK_DIR]/IEdgeInsights/build/builder.py`) to deploy the service.

NOTE: We recommend the users to follow the earlier use-case driven approach mentioned in Generate Consolidated Files for a Subset of Edge Insights for Industrial Services, when they want to deploy more than one microservice.

Run Edge Insights for Industrial Services¶

Note

Ensure to run docker-compose down from the build([WORK_DIR]/IEdgeInsights/build) directory before you bring up the EII stack. This helps to remove running containers and avoid any sync issues where other services have come up before ia_configmgr_agent container has completed the provisioning step.

If the images tagged with the EII_VERSION label, as in the build/.env([WORK_DIR]/IEdgeInsights/build/.env) do not exist locally in the system but are available in the Docker Hub, then the images will be pulled during the docker-compose upcommand.

Provision Edge Insights for Industrial¶

The EII provisioning is taken care by the ia_configmgr_agent service that gets launched as part of the EII stack. For more details on the ConfigMgr Agent component, refer to the Readme.

Start Edge Insights for Industrial in Dev Mode¶

Note

By default, EII is provisioned in the secure mode.

It is recommended not to use EII in the Dev mode in a production environment. In the Dev mode, all security features, communication to and from the etcd server over the gRPC protocol, and the communication between the EII services/apps over the ZMQ protocol are disabled.

By default, the EII empty certificates folder Certificates(

[WORK_DIR]/IEdgeInsights/Certificates]) will be created in the DEV mode. This happens because of docker bind mounts but it is not an issue.The

EII_INSTALL_PATHin the build/.env([WORK_DIR]/IEdgeInsights/build/.env) remains protected both in the DEV and the PROD mode with the Linux group permissions.

Starting EII in the Dev mode eases the development phase for System Integrators (SI). In the Dev mode, all components communicate over non-encrypted channels. To enable the Dev mode, set the environment variable DEV_MODE to true in the [WORK_DIR]/IEdgeInsights/build/.env file. The default value of this variable is false.

To provision EII in the developer mode, complete the following steps:

Update

DEV_MODE=truein[WORK_DIR]/IEdgeInsights/build/.env.Rerun the

build/builder.pyto regenerate the consolidated files.

Start Edge Insights for Industrial in Profiling Mode¶

The Profiling mode is used for collecting the performance statistics in EII. In this mode, each EII component makes a record of the time needed for processing any single frame. These statistics are collected in the visualizer where System Integrators (SIs) can see the end-to-end processing time and the end-to-end average time for individual frames.

To enable the Profiling mode, in the [WORK_DIR]/IEdgeInsights/build/.env file, set the environment variable PROFILING to true.

Run Provisioning Service and Rest of the Edge Insights for Industrial Stack Services¶

Note

After the EII services starts, you can use the Etcd UI web interface to make the changes to the EII service configs or interfaces keys.

in the DEV and the PROD mode, if the EII services come before the Config Manager Agent service, then they would be in the restarting mode with error logs such as

Config Manager initialization failed.... This is due to the single step deployment to support the independent deployment of the EII services, where services can come in a random order and start working when the dependent service comes up later. In one to two minutes, all the EII services should show the status asrunningwhenConfig Manager Agentservice starts up.To build the common libs and generate needed artifacts from source and use it for building the EII services, refer common/README.md.

docker-compose up -d

On successful run, you can open the web visualizer in the Chrome browser at https://<HOST_IP>:3000. The HOST_IP corresponds to the IP of the system on which the visualization service is running.

Kubernetes Deployment¶

This deployment primarily supports multi-node cluster deployment

With K8s Orchestrator¶

You can use any of the following options to deploy EII on a multi-node cluster:

[

Recommended] For deploying through ansible playbook on multiple nodes automatically, refer to build/ansible/README.mdFor information about using helm charts to provision the node and deploy the EII services, refer to build/helm-eii/README.md

Azure Manifest Deployment¶

For more details refer to Azure Deployment

List of EII Services¶

Based on requirement, you can include or exclude the following EII services in the [WORKDIR]/IEdgeInsights/build/docker-compose.yml file:

Provisioning Service - This service is a prerequisite and cannot be excluded from the

docker-compose.ymlfile.Common EII services for Video and Timeseries Analytics pipeline services

OpcuaExport - Optional service to read from the VideoAnalytics container to publish data to opcua clients.

RestDataExport - Optional service to read the metadata and the image blob from the from the Data store service.

Video Analytics pipeline services

FactoryControlApp - Optional service to read from the VideoAnalytics container if you want to control the light based on the defective or non-defective data

Timeseries Analytics pipeline services

Adding New Services to EII Stack¶

This section provides information about adding a service, subscribing to the EdgeVideoAnalyticsMicroservice([WORK_DIR]/IEdgeInsights/EdgeVideoAnalyticsMicroservice), and publishing it on a new port.

Add a service to the EII stack as a new directory in the IEdgeInsights([WORK_DIR]/IEdgeInsights/) directory. The Builder registers and runs any service present in its own directory in the IEdgeInsights([WORK_DIR]/IEdgeInsights/) directory. The directory should contain the following:

A

docker-compose.ymlfile to deploy the service as a docker container. TheAppNameis present in theenvironmentsection in thedocker-compose.ymlfile. Before adding theAppNameto the mainbuild/eii_config.json, it is appended to theconfigandinterfacesas/AppName/configand/AppName/interfaces.A

config.jsonfile that contains the required config for the service to run after it is deployed. Theconfig.jsonconsists of the following:A

configsection, which includes the configuration-related parameters that are required to run the application.An

interfacessection, which includes the configuration of how the service interacts with other services of the EII stack.

Note

For more information on adding new EII services, refer to the EII sample apps at Samples written in C++, Python, and Golang using the EII core libraries.

The following example shows:

How to write the config.json for any new service

Subscribe to EdgeVideoAnalyticsMicroservice

Publish on a new port

{

"config": {

"paramOne": "Value",

"paramTwo": [1, 2, 3],

"paramThree": 4000,

"paramFour": true

},

"interfaces": {

"Subscribers": [

{

"Name": "default",

"Type": "zmq_tcp",

"EndPoint": "127.0.0.1:65114",

"PublisherAppName": "EdgeVideoAnalyticsMicroservice",

"Topics": [

"edge_video_analytics_results"

]

}

],

"Publishers": [

{

"Name": "default",

"Type": "zmq_tcp",

"EndPoint": "127.0.0.1:65113",

"Topics": [

"publish_stream"

],

"AllowedClients": [

"ClientOne",

"ClientTwo",

"ClientThree"

]

}

]

}

}

The config.json file consists of the following key and values:

value of the

configkey is the config required by the service to run.value of the

interfaceskey is the config required by the service to interact with other services of EII stack over the Message Bus.the

Subscribersvalue in theinterfacessection denotes that this service should act as a subscriber to the stream being published by the value specified byPublisherAppNameon the endpoint mentioned in value specified byEndPointon topics specified in value ofTopickey.the

Publishersvalue in theinterfacessection denotes that this service publishes a stream of data after obtaining and processing it fromEdgeVideoAnalyticsMicroservice. The stream is published on the endpoint mentioned in value ofEndPointkey on topics mentioned in the value ofTopicskey.the services mentioned in the value of

AllowedClientsare the only clients that can subscribe to the published stream, if it is published securely over the Message Bus.

Note

Like the interface keys, EII services can also have

ServersandClientsinterface keys. For more information, refer to config.json([WORK_DIR]/IEdgeInsights/VideoIngestion/config.json) of theVideoIngestionservice and config.json([WORK_DIR]/IEdgeInsights/tools/SWTriggerUtility/config.json) of the SWTriggerUtility tool.For more information on the

interfaceskey responsible for the Message Bus endpoint configuration, refer to common/libs/ConfigMgr/README.md#interfaces.For the etcd secrets configuration, in the new EII service or app

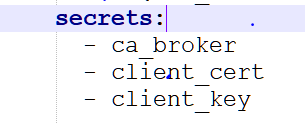

docker-compose.ymlfile, add the following volume mounts with the rightAppNameenv value:

...

volumes:

- ./Certificates/[AppName]:/run/secrets/[AppName]:ro

- ./Certificates/rootca/cacert.pem:/run/secrets/rootca/cacert.pem:ro

DataStore Microservice¶

Data Store microservice provides on-prem persistent metadata stored in the InfluxDB* platform and Binary Large Object (BLOB) data stored in the MinIO* system. These storage solutions are of NoSQL nature and support video analytics and time-series analytics data store operations at the edge.

The microservice supports the following data storage types:

BLOBs: these are files in containers as BLOBs of data.

NoSQL data.

The DataStore microservice consists of the following databases:

DataStore Configuration¶

The configurations for Datastore Service are added in etcd. The configuration details are available in the docker-compose file, under AppName in the environment section of the app’s service definition.

For the scenario, when the AppName is Datastore, the following example shows how the app’s config will look for /DataStore/config key in etcd:

"dbs": {

"influxdb": {

"topics": ["*"],

"server": "datastore_influxdb_server",

"retention": "1h30m5s",

"dbname": "datain",

"ssl": "True",

"verifySsl": "False",

"port": "8086",

"pubWorkers": "5",

"subWorkers": "5",

"ignoreKeys": [ "defects" ],

"tagKeys": []

},

"miniodb": {

"topics": ["camera1_stream_results"],

"server": "datastore_minio_server",

"retentionTime": "1h",

"retentionPollInterval": "60s",

"ssl": "false"

}

}

By default, both the DBs will be enabled. If you want to disable any of the above DBs, remove the corresponding key and its value from the config.

For Example, if you are not using MinIO DB, you can disable the same and modify the config as below:

"dbs": {

"influxdb": {

"topics": ["*"],

"server": "datastore_influxdb_server",

"retention": "1h30m5s",

"dbname": "datain",

"ssl": "True",

"verifySsl": "False",

"port": "8086",

"pubWorkers": "5",

"subWorkers": "5",

"ignoreKeys": [ "defects" ],

"tagKeys": []

}

}

The following are the details of the keys in the above config:

The

topicskey determines which messages are to be processed by the corresponding DB microservice. Only the messages with a topic listed intopicskey are processed by the individual module. Iftopicscontain\*, then all the messages are processed.The

serverkey specifies the name of the server interface on which, the corresponding module server is active

InfluxDB¶

- DataStore will subscribe to the InfluxDB and start the zmq

publisher, zmq subscriber threads, and zmq request reply thread based on PubTopics, SubTopics and QueryTopics configuration.

- zmq subscriber thread connects to the PUB socket of zmq bus on which

the data is published by VideoAnalytics and push it to the InfluxDB.

- zmq publisher thread will publish the point data ingested by the telegraf

and the classifier result coming out of the point data analytics.

- zmq reply request service will receive the InfluxDB select query and

response with the historical data.

For nested json data, by default, DataStore will flatten the nested json and push the flat data to InfluxDB to avoid the flattening of any particular nested key mention the tag key in the config.json(``[WORK_DIR]/IEdgeInsights/DataStore/config.json``) file. Currently the defects key is ignored from flattening. Every key to be ignored has to be in a new line.

For example,

ignore_keys = [ "Key1", "Key2", "Key3" ]

By default, all the keys in the data schema will be pushed to InfluxDB as fields. If tags are present in data schema, it can be mentioned in the config.json(``[WORK_DIR]/IEdgeInsights/DataStore/config.json``) file then the data pushed to InfluxDB, will have fields and tags both. At present, no tags are visible in the data scheme and tag_keys are kept blank in the config file.

For Example,

tag_keys = [ "Tag1", "Tag2" ]

MinIO¶

The MinIO DB submodule primarily subscribes to the stream that comes out of the VideoAnalytics app via MessageBus and stores the frame into minio for historical analysis.

The high-level logical flow of MinIO DB is as follows:

The messagebus subscriber in MinIO DB will subscribe to the VideoAnalytics published classified result (metadata, frame) on the messagebus. The img_handle is extracted out of the metadata and is used as the key and the frame is stored as a value for that key in minio persistent storage.

For a historical analysis of the stored classified images, MinIO DB starts the messagebus server which provides the read and store interfaces. The payload format is as follows for:

Store Interface:

Request: map ("command": "store","img_handle":"$handle_name"),[]byte($binaryImage) Response : map ("img_handle":"$handle_name", "error":"$error_msg") ("error" is optional and available only in case of error in execution.) b. Read Interface:

Request : map ("command": "read", "img_handle":"$handle_name") Response : map ("img_handle":"$handle_name", "error":"$error_msg"),[]byte($binaryImage) ("error" is optional and available only in case of error in execution. And $binaryImage is available only in case of successful read)

Detailed Description on Each Keys¶

accessKey |Username required to access Minio DB |Any suitable value|Required|

secretKey|Password required to access Minio DB| Any suitable value| Required

retentionTime|The retention parameter specifies the retention policy to apply for the images stored in Minio DB. In case of infinite retention time, set it to “-1”| Suitable duration string value as mentioned at https://golang.org/pkg/time/#ParseDuration.| Required

retentionPollInterval|Used to set the time interval for checking images for expiration. Expired images will become candidates for deletion and no longer retained. In case of infinite retention time, this attribute will be ignored| Suitable duration string value as mentioned at https://golang.org/pkg/time/#ParseDuration| Required

ssl|If “true”, establishes a secure connection with Minio DB else a non-secure connection| “true” or “false”| Required

Steps to Independently Build and Deploy DataStore Microservice¶

Note

For running two or more microservices, you are recommended to try the use case-driven approach for building and deploying as described at Generate Consolidated Files for a Subset of Edge Insights for Industrial Services

Steps to Independently Build the DataStore Microservice¶

Note

When switching between independent deployment of the service with and without config manager agent service dependency, one would run into issues with docker-compose build w.r.t Certificates folder existence. As a workaround, run the command sudo rm -rf Certificates to proceed with the docker-compose build.

To independently build the DataStore microservice, complete the following steps:

The downloaded source code should have a directory named DataStore:

cd IEdgeInsights/DataStore

Copy the IEdgeInsights/build/.env file using the following command in the current folder

cp ../build/.env .

NOTE: Update the HOST_IP and ETCD_HOST variables in the .env file with your system IP.

# Source the .env using the following command: set -a && source .env && set +a

Independently build

docker-compose build

Steps to Independently Deploy DataStore Microservice¶

You can deploy the DataStore service in either of the following two ways:

Deploy DataStore Service without Config Manager Agent Dependency¶

Run the following commands to deploy DataStore service without Config Manager Agent dependency:

# Enter the DataStore directory

cd IEdgeInsights/DataStore

Copy the IEdgeInsights/build/.env file using the following command in the current folder, if not already present.

cp ../build/.env .Note: Ensure that

docker psis clean anddocker network lsmust not have EII bridge network.

Update .env file for the following:

1. HOST_IP and ETCD_HOST variables with your system IP.

2. `READ_CONFIG_FROM_FILE_ENV` value to `true` and `DEV_MODE` value to `true`.

3. Set the values for 'INFLUXDB_USERNAME', 'INFLUXDB_PASSWORD', 'MINIO_ACCESS_KEY', 'MINIO_SECRET_KEY' which are InfluxDB and Minio DB credentials

Source the .env using the following command:

set -a && source .env && set +a

Set Write Permission for Data Dir(Volume Mount paths): This is required for Database Server to have write permission to the respective storage paths.

sudo mkdir -p $EII_INSTALL_PATH/data

sudo chmod 777 $EII_INSTALL_PATH/data

sudo chown -R eiiuser:eiiuser $EII_INSTALL_PATH/data

# Run the service

docker-compose -f docker-compose.yml -f docker-compose-dev.override.yml up -d

Note

Datastore container restarts automatically when its config is modified in the config.json file.

If you update the config.json file by using vi or vim editor, it is required to append the set backupcopy=yes in ~/.vimrc so that the changes done on the host machine config.json are reflected inside the container mount point.

Deploy DataStore Service with Config Manager Agent Dependency¶

Run the following commands to deploy the DataStore Service with Config Manager Agent dependency:

Note

Ensure that the Config Manager Agent image present in the system. If not, build the Config Manager Agent locally when independently deploying the service with Config Manager Agent dependency.

# Enter the DataStore directory

cd IEdgeInsights/DataStore

Copy the IEdgeInsights/build/.env file using the following command in the current folder, if not already present.

cp ../build/.env .Note: Ensure that

docker psis clean anddocker network lsdoesn’t have EII bridge networks.

Update .env file for following:

1. HOST_IP and ETCD_HOST variables with your system IP.

2. `READ_CONFIG_FROM_FILE_ENV` value is set to `false`.

3. Set the values for 'INFLUXDB_USERNAME', 'INFLUXDB_PASSWORD', 'MINIO_ACCESS_KEY', 'MINIO_SECRET_KEY' which are InfluxDB and Minio DB credentials

Copy the docker-compose.yml from IEdgeInsights/ConfigMgrAgent as docker-compose.override.yml in IEdgeInsights/DataStore.

cp ../ConfigMgrAgent/docker-compose.yml docker-compose.override.yml

Copy the builder.py with standalone mode changes from IEdgeInsights/build directory

cp ../build/builder.py .Run the builder.py in standalone mode, this will generate eii_config.json and update docker-compose.override.yml

python3 builder.py -s trueBuilding the service (This step is optional for building the service if not already done in the

Independently buildablestep above)docker-compose buildFor running the service in PROD mode, run the below command:

NOTE: Make sure to update

DEV_MODEtofalsein .env while running in PROD mode and source the .env using the commandset -a && source .env && set +a.docker-compose up -dFor running the service in DEV mode, run the below command:

NOTE: Make sure to update

DEV_MODEtotruein .env while running in DEV mode and source the .env using the commandset -a && source .env && set +a.docker-compose -f docker-compose.yml -f docker-compose-dev.override.yml -f docker-compose.override.yml up -d

Video Pipeline Analytics¶

Edge Video Analytics Microservice for Edge insights for Industrial (EII) Overview¶

The Edge Video Analytics Microservice (EVAM) combines video ingestion and analytics capabilities provided by Edge insights for Industrial (EII) visual ingestion and analytics modules. This directory provides the Intel® Deep Learning Streamer (Intel® DL Streamer) pipelines to perform object detection on an input URI source and send the ingested frames and inference results using the MsgBus Publisher. It also provides a Docker compose and config file to use EVAM with the Edge insights software stack.

Prerequisites¶

As a prerequisite for using EVAM in EII mode, download EII 4.0.0 package from EII 4.0.0 package from ESH and complete the following steps:

EII when downloaded from ESH would be available at the installed location

cd [EII installed location]/IEdgeInsights

Complete the prerequisite for provisioning the EII stack by referring to the README.md.

Download the required model files to be used for the pipeline mentioned in the config(

[WORK_DIR]/IEdgeInsights/EdgeVideoAnalyticsMicroservice/eii/config.json) file by completing step 2 to step 4 as mentioned in the README. ..Note: The model files are large and hence they are not part of the repo.

Run the following commands to set the environment, build the

ia_configmgr_agentcontainer and copy models to the required directory:Go to the

builddirectory:

cd [WORK_DIR]/IEdgeInsights/build

Configure visualizer app’s subscriber interfaces. Example: Add the following

interfaceskey value inVisualizer/multimodal-data-visualization-streaming/eii/config.jsonandVisualizer/multimodal-data-visualization/eii/config.jsonfiles.

"interfaces": { "Subscribers": [ { "Name": "default", "Type": "zmq_tcp", "zmq_recv_hwm": 50, "EndPoint": "ia_edge_video_analytics_microservice:65114", "PublisherAppName": "EdgeVideoAnalyticsMicroservice", "Topics": [ "edge_video_analytics_results" ] } ] }

Execute the builder.py script

python3 builder.py -f usecases/video-streaming-evam.yml

Create some necessary items for the service

sudo mkdir -p /opt/intel/eii/models/

Copy the downloaded model files to /opt/intel/eii

sudo cp -r [downloaded_model_directory]/models /opt/intel/eii/

Run the Containers¶

To pull the prebuilt EII container images and EVAM from Docker Hub and run the containers in the detached mode, run the following command:

# Launch the EII stack

docker-compose up -d

Note

The prebuilt container image for the Edge Video Analytics Microservice gets downloaded when you run the

docker-compose up -dcommand, if the image is not already present on the host system.The ETCD watch capability is enabled for Edge Video Analytics Microservice and it will restart when config/interface changes are done via the EtcdUI interface. While changing the pipeline/pipeline_version, make sure they are volume mounted to the container.

Configuration¶

See the edge-video-analytics-microservice/eii/config.json([WORK_DIR]/IEdgeInsights/EdgeVideoAnalyticsMicroservice/eii/config.json) file for the configuration of EVAM. The default configuration will start the

object_detection demo for EII.

The config file is divided into two sections as follows:

Config¶

The following table describes the attributes that are supported in the config section.

Parameter |

Description |

|---|---|

|

Type of EII certs to be created. This should be |

|

Source of the frames. This should be |

|

The parameters for the source element. The provided object is the typical parameters. |

|

The name of the DL Streamer pipeline to use. This should correspond to a directory in the pipelines directory). |

|

The version of the pipeline to use. This typically is a subdirectory of a pipeline in the pipelines directory. |

|

The Boolean flag for whether to publish the metadata and the analyzed frame, or just the metadata. |

|

This provides the parameters for the model used for inference. |

Interfaces¶

Currently in the EII mode, EVAM supports launching a single pipeline and publishing on a single topic. This implies that in the configuration file (“config.json”), the single JSON object in the Publisher list is where the configuration resides for the published data. For more details on the structure, refer to the EII documentation.

EVAM also supports subscribing and publishing messages or frames using the Message Bus. The endpoint details for the EII service you need to subscribe from are to be provided in the Subscribers section in the config([WORK_DIR]/IEdgeInsights/EdgeVideoAnalyticsMicroservice/eii/config.json) file and the endpoints where you need to publish to are to be provided in Publishers section in the config([WORK_DIR]/IEdgeInsights/EdgeVideoAnalyticsMicroservice/eii/config.json) file.

To enable injection of frames into the GStreamer pipeline obtained from Message Bus, ensure to make the following changes:

The source parameter in the config(

[WORK_DIR]/IEdgeInsights/EdgeVideoAnalyticsMicroservice/eii/config.json) file is set to msgbus. Refer to the following code snippet:"config": { "source": "msgbus" }

The template of respective pipeline is set to appsrc as source instead of uridecodebin. Refer to the following code snippet:

{ "type": "GStreamer", "template": ["appsrc name=source", " ! rawvideoparse", " ! appsink name=destination" ] }

Steps to Independently Build and Deploy EdgeVideoAnalyticsMicroservice Service¶

Note

For running two or more microservices, we recommend users to try the use case-driven approach for building and deploying as mentioned in Generate Consolidated Files for a Subset of Edge Insights for Industrial Services

Steps to Independently Build EdgeVideoAnalyticsMicroservice Service¶

Note

When switching between independent deployment of the service with and without config manager agent service dependency, one would run into issues with docker-compose build w.r.t Certificates folder existence. As a workaround, please run command sudo rm -rf Certificates to proceed with docker-compose build.

To independently build EdgeVideoAnalyticsMicroservice service, complete the following steps:

The downloaded source code should have a directory named EdgeVideoAnalyticsMicroservice/eii:

cd IEdgeInsights/EdgeVideoAnalyticsMicroservice/eii

Copy the IEdgeInsights/build/.env file using the following command in the current folder

cp ../../build/.env .

NOTE: Update the HOST_IP and ETCD_HOST variables in the .env file with your system IP.

# Source the .env using the following command: set -a && source .env && set +a

Independently build

docker-compose build

Steps to Independently Deploy EdgeVideoAnalyticsMicroservice Service¶

You can deploy the EdgeVideoAnalyticsMicroservice service in any of the following two ways:

Deploy EdgeVideoAnalyticsMicroservice Service without Config Manager Agent Dependency¶

Run the following commands to deploy EdgeVideoAnalyticsMicroservice service without Config Manager Agent dependency:

# Enter the eii directory

cd IEdgeInsights/EdgeVideoAnalyticsMicroservice/eii

Copy the IEdgeInsights/build/.env file using the following command in the current folder, if not already present.

cp ../../build/.env .Note: Ensure that

docker psis clean anddocker network lsmust not have EII bridge network.

Update .env file for the following:

1. HOST_IP and ETCD_HOST variables with your system IP.

2. `READ_CONFIG_FROM_FILE_ENV` value to `true` and `DEV_MODE` value to `true`.

Source the .env using the following command:

set -a && source .env && set +a

# Run the service

docker-compose -f docker-compose.yml -f docker-compose-dev.override.yml up -d

Deploy EdgeVideoAnalyticsMicroservice Service with Config Manager Agent Dependency¶

Run the following commands to deploy EdgeVideoAnalyticsMicroservice service with Config Manager Agent dependency:

Note: Ensure that the Config Manager Agent image present in the system. If not, build the Config Manager Agent locally when independently deploying the service with Config Manager Agent dependency.

# Enter the eii directory

cd IEdgeInsights/EdgeVideoAnalyticsMicroservice/eii

Copy the IEdgeInsights/build/.env file using the following command in the current folder, if not already present.

cp ../../build/.env .Note: Ensure that

docker psis clean anddocker network lsdoesn’t have EII bridge networks.

Update .env file for following:

1. HOST_IP and ETCD_HOST variables with your system IP.

2. `READ_CONFIG_FROM_FILE_ENV` value is set to `false`.

Copy the docker-compose.yml from IEdgeInsights/ConfigMgrAgent as docker-compose.override.yml

cp ../../ConfigMgrAgent/docker-compose.yml docker-compose.override.yml

Copy the builder.py with standalone mode changes from IEdgeInsights/build directory

cp ../../build/builder.py .Run the builder.py in standalone mode, this will generate eii_config.json and update docker-compose.override.yml

python3 builder.py -s trueBuilding the service (This step is optional for building the service if not already done in the

Independently buildablestep above)docker-compose buildRunning the service

Note: Source the .env using the command

set -a && source .env && set +abefore running the below command.docker-compose -f docker-compose.yml -f docker-compose-dev.override.yml -f docker-compose.override.yml up -d

Camera Configurations¶

You need to make changes to the config.json([WORK_DIR]/IEdgeInsights/EdgeVideoAnalyticsMicroservice/eii/config.json) and the templates section of the pipeline.json([WORK_DIR]/IEdgeInsights/EdgeVideoAnalyticsMicroservice/eii/pipelines/cameras/camera_source/pipeline.json) files while configuring cameras.

By default the pipeline.json([WORK_DIR]/IEdgeInsights/EdgeVideoAnalyticsMicroservice/eii/pipelines/cameras/camera_source/pipeline.json) file has the RTSP camera configurations.

The camera configurations for the Edge Video Analytics Microservice module are as follows:

Note

source_parameters values in config.json([WORK_DIR]/IEdgeInsights/EdgeVideoAnalyticsMicroservice/eii/config.json) can get overriden if the required gstreamer source plugin in specified in template section of pipeline.json([WORK_DIR]/IEdgeInsights/EdgeVideoAnalyticsMicroservice/eii/pipelines/cameras/camera_source/pipeline.json) files.

GenICam GigE or USB3 Cameras¶

Note

As Matrix Vision SDK([WORK_DIR]/IEdgeInsights/EdgeVideoAnalyticsMicroservice/eii/mvGenTL_Acquire-x86_64_ABI2-2.44.1.tgz) is being used with evaluation license, one would start seeing watermark after 200 ingested images when using a non Matrix Vision camera. One has to purchase the Matrix Vision license to remove this watermark or use a Matrix Vision camera or integrate the respective camera SDK(Eg: basler camera SDK for basler cameras).

For more information or configuration details for the GenICam GigE or the USB3 camera support, refer to the GenICam GigE/USB3.0 Camera Support.

Prerequisites for Working with the GenICam Compliant Cameras¶

The following are the prerequisites for working with the GeniCam compliant cameras.

Note

For other cameras such as RSTP, and USB (v4l2 driver compliant) revert the changes that are mentioned in this section. Refer to the following snip of the

ia_edge_video_analytics_microserviceservice, to add the required changes in the docker-compose.yml([WORK_DIR]/IEdgeInsights/EdgeVideoAnalyticsMicroservice/eii/docker-compose.yml) file. After making the changes, before you build and run the services, ensure to run the builder.py([WORK_DIR]/IEdgeInsights/build/builder.py).

For GenICam GigE cameras:

Update the ETCD_HOST key with the current system’s IP in the .env([WORK_DIR]/IEdgeInsights/build/.env) file.

ETCD_HOST=<HOST_IP>

You need to add root user and network_mode: host in the docker-compose.yml([WORK_DIR]/IEdgeInsights/EdgeVideoAnalyticsMicroservice/eii/docker-compose.yml) file and comment the sections networks and ports.

Make the following changes in the docker-compose.yml([WORK_DIR]/IEdgeInsights/EdgeVideoAnalyticsMicroservice/eii/docker-compose.yml) file.

ia_edge_video_analytics_microservice:

# Add root user

user: root

# Add network mode host

network_mode: host

# Please make sure that the above commands are not added under the environment section and also take care about the indentations in the compose file.

...

environment:

...

# Add HOST_IP to no_proxy and ETCD_HOST

no_proxy: "<eii_no_proxy>,${RTSP_CAMERA_IP},<HOST_IP>"

ETCD_HOST: ${ETCD_HOST}

...

# Comment networks section will throw an error when network mode host is used.

# networks:

# - eii

# Comment ports section as following

# ports:

# - '65114:65114'

Configure visualizer app’s subscriber interfaces in the Multimodal Data Visualization Streaming’s config.json file as follows.

"interfaces": {

"Subscribers": [

{

"Name": "default",

"Type": "zmq_tcp",

"EndPoint": "<HOST_IP>:65114",

"PublisherAppName": "EdgeVideoAnalyticsMicroservice",

"Topics": [

"edge_video_analytics_results"

]

}

]

}

Note

Add <HOST_IP> to the no_proxy environment variable in the Multimodal Data Visualization Streaming visualizer’s docker-compose.yml file.

For GenIcam USB3.0 cameras:

Make the following changes to add root user in the docker-compose.yml([WORK_DIR]/IEdgeInsights/EdgeVideoAnalyticsMicroservice/eii/docker-compose.yml) file.

ia_edge_video_analytics_microservice:

# Add root user

user: root

...

environment:

# Refer [GenICam GigE/USB3.0 Camera Support](/4.0/IEdgeInsights/EdgeVideoAnalyticsMicroservice/eii/docs/generic_plugin_doc.html) to install the respective camera SDK

# Setting GENICAM value to the respective camera/GenTL producer which needs to be used

GENICAM: "<CAMERA/GenTL>"

...

Note

If the GenICam cameras do not get initialized during the runtime, then on the host system, run the

docker system prunecommand. After that, remove the GenICam specific semaphore files from the/dev/shm/path of the host system. Thedocker system prunecommand will remove all the stopped containers, networks that are not used (by at least one container), any dangling images, and build cache which could prevent the plugin from accessing the device.If you get the

Feature not writablemessage while working with the GenICam cameras, then reset the device using the camera software or using the reset property of the Generic Plugin. For more information, refer the README.

Refer the following configuration for configuring the config.json(

[WORK_DIR]/IEdgeInsights/EdgeVideoAnalyticsMicroservice/eii/config.json) file for GenICam GigE/USB3.0 cameras.// "source_parameters": { "element": "gencamsrc", "type": "gst" }, "pipeline": "cameras", "pipeline_version": "camera_source", "publish_frame": true, "model_parameters": {}, //

Make the following changes to the templates section of the pipeline.json(

[WORK_DIR]/IEdgeInsights/EdgeVideoAnalyticsMicroservice/eii/pipelines/cameras/camera_source/pipeline.json)."type": "GStreamer", "template": [ "gencamsrc serial=<DEVICE_SERIAL_NUMBER> pixel-format=<PIXEL_FORMAT> width=<IMAGE_WIDTH> height=<IMAGE_HEIGHT> name=source", " ! videoconvert", " ! video/x-raw,format=BGR", " ! appsink name=destination" ],

Refer to the docs/basler_doc.md for more information/configuration on Basler camera.

Note:

Generic Plugin can work only with GenICam compliant cameras and only with gstreamer ingestor.

The above gstreamer pipeline was tested with Basler and IDS GigE cameras.

If

serialis not provided, then the first connected camera in the device list will be used.If

pixel-formatis not provided then the defaultmono8pixel format will be used.If

widthandheightproperties are not set then gencamsrc plugin will set the maximum resolution supported by the camera.By default,

exposure-autoproperty is set to on. If the camera is not placed under sufficient light then with auto exposure,exposure-timecan be set to very large value which will increase the time taken to grab frame. This can lead toNo frame received error. Hence it is recommended to manually set exposure as in the following sample pipeline when the camera is not placed under good lighting conditions.throughput-limitis the bandwidth limit for streaming out data from the camera(in bytes per second). Setting this property to a higher value might result in better FPS but make sure that the system and the application can handle the data load otherwise it might lead to memory bloat. Refer the below example pipeline to use the above mentioned properties:"type": "GStreamer", "template": [ "gencamsrc serial=<DEVICE_SERIAL_NUMBER> pixel-format=ycbcr422_8 width=1920 height=1080 exposure-time=5000 exposure-mode=timed exposure-auto=off throughput-limit=100000000 name=source", " ! videoconvert", " ! video/x-raw,format=BGR", " ! appsink name=destination" ],

While using the basler USB3.0 camera, ensure that the USBFS limit is set to atleast 256MB or more. You can verify this value by using command

cat /sys/module/usbcore/parameters/usbfs_memory_mb. If it is less than 256MB, then follow these steps to increase the USBFS value.

RTSP Cameras¶

Update the RTSP camera IP or the simulated source IP to the RTSP_CAMERA_IP variable in the .env([WORK_DIR]/IEdgeInsights/build/.env) file. Refer to the docs/rtsp_doc.md for information/configuration on RTSP camera.

Refer the following configuration for configuring the config.json(

[WORK_DIR]/IEdgeInsights/EdgeVideoAnalyticsMicroservice/eii/config.json) file for RTSP camera.// "source_parameters": { "element": "rtspsrc", "type": "gst" }, "pipeline": "cameras", "pipeline_version": "camera_source", "publish_frame": true, "model_parameters": {}, //

Make the following changes to the templates section of the pipeline.json(

[WORK_DIR]/IEdgeInsights/EdgeVideoAnalyticsMicroservice/eii/pipelines/cameras/camera_source/pipeline.json)."type": "GStreamer", "template": [ "rtspsrc location=\"rtsp://<USERNAME>:<PASSWORD>@<RTSP_CAMERA_IP>:<PORT>/<FEED>\" latency=100 name=source", " ! rtph264depay", " ! h264parse", " ! vaapih264dec", " ! vaapipostproc format=bgrx", " ! videoconvert ! video/x-raw,format=BGR", " ! appsink name=destination" ],

Note

The RTSP URI of the physical camera depends on how it is configured using the camera software. You can use VLC Network Stream to verify the RTSP URI to confirm the RTSP source.

USB v4l2 Cameras¶

For information or configurations details on the USB cameras, refer to docs/usb_doc.md.

Refer the following configuration for configuring the config.json(

[WORK_DIR]/IEdgeInsights/EdgeVideoAnalyticsMicroservice/eii/config.json) file for USB v4l2 camera.// "source_parameters": { "element": "v4l2src", "type": "gst" }, "pipeline": "cameras", "pipeline_version": "camera_source", "publish_frame": true, "model_parameters": {}, //

Make the following changes to the templates section of the pipeline.json(

[WORK_DIR]/IEdgeInsights/EdgeVideoAnalyticsMicroservice/eii/pipelines/cameras/camera_source/pipeline.json)."type": "GStreamer", "template": [ "v4l2src device=/dev/<DEVICE_VIDEO_NODE> name=source", " ! video/x-raw,format=YUY2", " ! videoconvert ! video/x-raw,format=BGR", " ! appsink name=destination" ],

Image Ingestion¶

The Image ingestion feature is responsible for ingesting the images coming from a directory into the EII stack for further processing. Image ingestion supports the following image formats:

Jpg

Jpeg

Jpe

Bmp

Png

Volume mount the image directory present on the host system. To do this, provide the absolute path of the images directory in the docker-compose file.

Refer the following snippet of the ia_edge_video_analytics_microservice service to add the required changes in the docker-compose.yml([WORK_DIR]/IEdgeInsights/EdgeVideoAnalyticsMicroservice/eii/docker-compose.yml) file. After making the changes, ensure that the builder.py([WORK_DIR]/IEdgeInsights/build/builder.py) is executed before you build and run the services.

ia_edge_video_analytics_microservice:

...

volume:

- "/tmp:/tmp"

# volume mount the udev database with read-only permission,so the USB3 Vision interfaces can be enumerated correctly in the container

- "/run/udev:/run/udev:ro"

# Volume mount the directory in host system where the images are stored onto the container directory system.

# Eg: -"home/directory_1/images_directory:/home/pipeline-server/img_dir"

- "<relative or absolute path to images directory>:/home/pipeline-server/img_dir"

...

Refer the following snippet for configuring the config.json([WORK_DIR]/IEdgeInsights/EdgeVideoAnalyticsMicroservice/eii/config.json) file for enabling the image ingestion feature for Jpg and Png images.

{

//

"model_parameters": {},

"pipeline": "cameras",

"pipeline_version": "camera_source",

"publish_frame": true,

"source": "gstreamer",

"source_parameters": {

"element": "multifilesrc",

"type": "gst"

},

}

For JPG Images

Refer to the following pipeline while using jpg,jpeg and jpe images and make changes to the pipeline.json(

[WORK_DIR]/IEdgeInsights/EdgeVideoAnalyticsMicroservice/eii/pipelines/cameras/camera_source/pipeline.json) file."type": "GStreamer", "template": [ "multifilesrc location=\"/home/pipeline-server/img_dir/<image_filename>%02d.jpg\" index=1 name=source", " ! jpegdec ! decodebin", " ! videoconvert ! video/x-raw,format=BGR", " ! appsink name=destination" ],

For example: If the images are named in the format

frame_01,frame_02and so on, then use the following pipeline."type": "GStreamer", "template": [ "multifilesrc location=\"/home/pipeline-server/img_dir/frame_%02d.jpg\" index=1 name=source", " ! jpegdec ! decodebin", " ! videoconvert ! video/x-raw,format=BGR", " ! appsink name=destination" ],

Note

The images should follow a naming convention and should be named in the format characters followed by digits in the sequential order. For eg.

frame_001,frame_002,frame_003and so on.Make use of the

%dformat specifier to specify the total digits present in the image filename. For Eg. If the images are named in the formatframe_0001,frame_0002, then it has total 4 digits in the filename. Use%04dwhile providing the image name<image_filename>%04d.jpgin the pipeline.json([WORK_DIR]/IEdgeInsights/EdgeVideoAnalyticsMicroservice/eii/pipelines/cameras/camera_source/pipeline.json) file.The ingestion will stop if it does not find the required image name. For eg. If directory contains images

frame_01,frame_02andframe_04, then the ingestion will stop after readingframe_02sinceframe_03is not present in the directory.Make use of images having resolution -

720×480,1280×720,1920×1080,3840×2160and1920×1200. If a different resolution image is used then the EdgeVideoAnalytics service might fail withreshapeerror as gstreamer does zero padding to that image.Make sure that the images directory is having the required read and execute permission. If not use the following command to add the permissions.

sudo chmod -R 755 <path to images directory>

For PNG Images

Refer to the follwoing pipeline while using png images and make changes to the pipeline.json(

[WORK_DIR]/IEdgeInsights/EdgeVideoAnalyticsMicroservice/eii/pipelines/cameras/camera_source/pipeline.json) file."type": "GStreamer", "template": [ "multifilesrc location=\"/home/pipeline-server/img_dir/<image_filename>%03d.png\" index=1 name=source", " ! pngdec ! decodebin", " ! videoconvert ! video/x-raw,format=BGR", " ! appsink name=destination" ],

Note

It is recommended to set the loop property of the multifilesrc element to false loop=FALSE to avoid memory leak issues.

For BMP Images

Refer to the following snippet for configuring the config.json([WORK_DIR]/IEdgeInsights/EdgeVideoAnalyticsMicroservice/eii/config.json) file for enabling the image ingestion feature for bmp image.

{

//

"model_parameters": {},

"pipeline": "cameras",

"pipeline_version": "camera_source",

"publish_frame": true,

"source": "gstreamer",

"source_parameters": {

"element": "imagesequencesrc",

"type": "gst"

},

}

Refer to the following pipeline while using bmp images and make changes to the pipeline.json([WORK_DIR]/IEdgeInsights/EdgeVideoAnalyticsMicroservice/eii/pipelines/cameras/camera_source/pipeline.json) file.

"type": "GStreamer",

"template": [

"imagesequencesrc location=/home/pipeline-server/img_dir/<image_filename>%03d.bmp start-index=1 framerate=1/1",

" ! decodebin",

" ! videoconvert ! video/x-raw,format=BGR",

" ! appsink name=destination"

],

Path Specification for images:

Considering folder name as ‘images’ where the images are stored.

Relative Path: "./images:/home/pipeline-server/img_dir" (or) "${PWD}/images:/home/pipeline-server/img_dir"

Absolute Path: "/home/ubuntu/images:/home/pipeline-server/img_dir"

Integrate Python UDF with EdgeVideoAnalyticsMicroservice Service¶

You can integrate any python UDF with EdgeVideoAnalyticsMicroservice using the volume mount method. You can follow the steps to integrate the python UDF:

- Volume mount the python UDF.

You need to provide absolute or relative path to the python UDF and the video file in the

docker-compose.ymlfile. Refer the following snippet to volume mount the python UDF using the docker-compose.yml([WORK_DIR]/IEdgeInsights/EdgeVideoAnalyticsMicroservice/eii/docker-compose.yml) file.

ia_edge_video_analytics_microservice: ... volumes: - ../EdgeVideoAnalyticsMicroservice/eii/pipelines:/home/pipeline-server/pipelines/ - ../EdgeVideoAnalyticsMicroservice/resources:/home/pipeline-server/resources/ - <absolute or relative path to the python_udf>:/home/pipeline-server/eva_udfs/<python_udf> ... For example. If you want to use the safety_gear(\ ``[WORK_DIR]/IEdgeInsights/CustomUdfs/PySafetyGearAnalytics/safety_gear``\ ) UDF, then you need to volume mount this UDF. The structure of safety_gear UDF is as show:

safety_gear/ |-- __init__.py |-- ref | |-- frozen_inference_graph.bin | |-- frozen_inference_graph.xml | |-- frozen_inference_graph_fp16.bin | `-- frozen_inference_graph_fp16.xml `-- safety_classifier.py Refer to the following snippet to volume mount the safety_gear UDF.

ia_edge_video_analytics_microservice: ... volumes: - ../EdgeVideoAnalyticsMicroservice/eii/pipelines:/home/pipeline-server/pipelines/ - ../EdgeVideoAnalyticsMicroservice/resources:/home/pipeline-server/resources/ - /home/IEdgeInsights/CustomUdfs/PySafetyGearAnalytics/safety_gear:/home/pipeline-server/eva_udfs/safety_gear ...

Start cvlc based RTSP stream

Install VLC if not installed already:

sudo apt install vlcIn order to use the RTSP stream from cvlc, the RTSP server must be started using VLC with the following command:

cvlc -vvv file://<absolute_path_to_video_file> --sout '#gather:rtp{sdp=rtsp://<SOURCE_IP>:<PORT>/<FEED>}' --loop --sout-keep

Note

<FEED> in the cvlc command can be live.sdp or it can also be avoided. But make sure the same RTSP URI given here is used in the ingestor pipeline config.

For example, Refer to the command to start a cvlc based RTSP stream for safety gear video file([WORK_DIR]/IEdgeInsights/CustomUdfs/PySafetyGearIngestion/Safety_Full_Hat_and_Vest.avi).

cvlc -vvv file:///home/IEdgeInsights/CustomUdfs/PySafetyGearIngestion/Safety_Full_Hat_and_Vest.avi --sout '#gather:rtp{sdp=rtsp://<SOURCE_IP>:8554/live.sdp}' --loop --sout-keep

- Configure the pipeline.json(

[WORK_DIR]/IEdgeInsights/pipeline.json) You need to update the

templatessection and provide the path to the video file in the pipeline.json([WORK_DIR]/IEdgeInsights/pipeline.json). Refer to the example to configure the pipeline.json file.

{ "type": "GStreamer", "template": [ "rtspsrc location=\"rtsp://<SOURCE_IP>:<PORT>/<FEED>\" latency=100 name=source", " ! rtph264depay", " ! h264parse", " ! vaapih264dec", " ! vaapipostproc format=bgrx", " ! videoconvert ! video/x-raw,format=BGR", " ! udfloader name=udfloader", " ! appsink name=destination" ], "description": "EII UDF pipeline", "parameters": { "type": "object", "properties": { "udfloader": { "element": { "name": "udfloader", "property": "config", "format": "json" }, "type": "object" } } } } **Note:** Make sure ``parameters`` tag is added while using udfloader element.

- Configure the pipeline.json(

Configure the config.json(

[WORK_DIR]/IEdgeInsights/EdgeVideoAnalyticsMicroservice/eii/config.json) file.You need to configure the

udfssection in theconfig.jsonfile as shown:You need to configure the

source_parameterssection and theudfssection in theconfig.jsonfile as shown below.main

{ "source_parameters": { "element": "rtspsrc", "type": "gst" }, // "udfs": [ { "name": "<path to python udf>", "type": "python", "device": "CPU", "model_xml": "<path to model xml file>", "model_bin": "<path to model bin file>" } ] // } The following example shows the configuration for safety_gear UDF.

{ "source_parameters": { "element": "rtspsrc", "type": "gst" }, // "udfs": [ { "name": "eva_udfs.safety_gear.safety_classifier", "type": "python", "device": "CPU", "model_xml": "./eva_udfs/safety_gear/ref/frozen_inference_graph.xml", "model_bin": "./eva_udfs/safety_gear/ref/frozen_inference_graph.bin" } ] // }

**Note:**

- One can add custom udfs to eva_udfs(`[WORK_DIR]/IEdgeInsights/EdgeVideoAnalyticsMicroservice/eva_udfs`) directory as this path is volume mounted to the EVAM service. Refer the above example to add a custom udf.

- Geti udf takes the path of the deployment directory as the input for deploying a project for local inference. Refer the below example to see how the path of the deployment directory is specified in the udf config. As mentioned in the above steps make sure all the required resources are volume mounted to the EVAM service.

The following example shows the configuration for geti UDF.

```javascript

{

"source_parameters": {

"element": "rtspsrc",

"type": "gst"

},

//

"udfs": [

{

"type": "python",

"name": "<path to python geti udf>",

"device": "CPU",

"visualize": "true",

"deployment": "<path to geti deployment directory>"

}

]

//

}

```

Refer [geti udf readme](/4.0/IEdgeInsights/EdgeVideoAnalyticsMicroservice/eva_udfs/geti_udf/README.html) for more details.

After making the changes, ensure that the builder.py(`[WORK_DIR]/IEdgeInsights/build/builder.py`) script is executed before you build and run the services.

Running EdgeVideoAnalyticsMicroservice with multi-instance¶

EdgeVideoAnalyticsMicroservice supports running multi-instance with EII stack, you can run the following command to generate the multi-instance boiler plate config for any number of streams of video-streaming-evam use case:

python3 builder.py -f usecases/video-streaming-evam.yml -v <number_of_streams_required>

A sample example config on how to connect Multimodal Data Visualization and Multimodal Data Visualization Streaming with EdgeVideoAnalyticsMicroservice is given below. Please ensure to update both the configs of Multimodal Data Visualization and Multimodal Data Visualization Streaming services with these changes:

{

"interfaces": {

"Subscribers": [

{

"Name": "default",

"Type": "zmq_tcp",

"EndPoint": "ia_edge_video_analytics_microservice:65114",

"PublisherAppName": "EdgeVideoAnalyticsMicroservice",

"Topics": [

"edge_video_analytics_results"

]

}

]

}

}

Note

While using multi-instance feature, you need to update the config.json files and docker-compose files in the [WORK_DIR]/IEdgeInsights/build/multi_instance directory and the pipeline.json files present in the pipelines([WORK_DIR]/IEdgeInsights/EdgeVideoAnalyticsMicroservice/eii/pipelines/) directory.

Running EdgeVideoAnalyticsMicroservice with EII helm usecase¶

Please refer to README

Running EdgeVideoAnalyticsMicroservice on a GPU device¶

EdgeVideoAnalyticsMicroservice supports running inference only on CPU and GPU devices by accepting the device value (“CPU”|”GPU”), part of the udf object configuration in the udfs key.

The device field in the UDF config of udfs key in the EdgeVideoAnalyticsMicroservice configs([WORK_DIR]/IEdgeInsights/EdgeVideoAnalyticsMicroservice/eii/config.json) needs to be updated.

To Run on Intel(R) Processor Graphics (GPU/iGPU)¶

At runtime, use the root user permissions to run inference on a GPU device. To enable root user at runtime in ia_edge_video_analytics_microservice, add user: root in the docker-compose.yml([WORK_DIR]/IEdgeInsights/EdgeVideoAnalyticsMicroservice/eii/docker-compose.yml) file.

Refer the following example:

ia_edge_video_analytics_microservice:

...

user: root

Note

EdgeVideoAnalyticsMicroservice does not support running inference on VPU -

MYRIAD (NCS2)andHDDLdevices.If you get a

Failed to create plugin for device GPU/ clGetPlatformIDs errormessage, then check if the host system supports GPU device. Install the required drivers from OpenVINO-steps-for-GPU. Certain platforms like TGL can have compatibility issues with the Ubuntu kernel version. Ensure the compatible kernel version is installed.“EdgeVideoAnalyticsMicroservice by default runs the video ingestion and analytics pipeline in a single micro-service for which the interface connection configuration is as below.

"Publishers": [

{

"Name": "default",

"Type": "zmq_tcp",

"EndPoint": "0.0.0.0:65114",

"Topics": [

"edge_video_analytics_results"

],

"AllowedClients": [

"*"

]

}

“EdgeVideoAnalyticsMicroservice” can be configured to: run only with video ingestion capability by just adding the below Publisher section (The publisher config remains same as above) :

"Publishers": [ { "Name": "default", "Type": "zmq_tcp", "EndPoint": "0.0.0.0:65114", "Topics": [ "camera1_stream" ], "AllowedClients": [ "*" ] }

“EdgeVideoAnalyticsMicroservice” can be configured to: run only with video analytics capability by just adding the below Subscriber section.

"Subscribers": [

{

"Name": "default",

"Type": "zmq_ipc",

"EndPoint": "/EII/sockets",

"PublisherAppName": "VideoIngestion",

"Topics": [

"camera1_stream_results"

],

"zmq_recv_hwm": 50

}

]

The “source” parameter in the config.json needs to be updated to EII Messagebus since “EdgeVideoAnalyticsMicroservice” when running with video analytics capability need to get its data from the EII messagebus.

{

"config": {

"source": "msgbus",

Use Human Pose Estimation UDF with EdgeVideoAnalyticsMicroservice¶

To use the Human Pose Estimation([WORK_DIR]/IEdgeInsights/) UDF, make the following changes to the config.json([WORK_DIR]/IEdgeInsights/EdgeVideoAnalyticsMicroservice/eii/config.json) and docker-compose([WORK_DIR]/IEdgeInsights/EdgeVideoAnalyticsMicroservice/eii/docker-compose.yml) file.

Configure the config.json(

[WORK_DIR]/IEdgeInsights/EdgeVideoAnalyticsMicroservice/eii/config.json) and make changes to thepipelineandpipeline_version.

"pipeline": "object_detection",

"pipeline_version": "human_pose_estimation",

"publish_frame": true,

Volume mount the Human Pose Estimation UDF in the docker-compose.yml(

[WORK_DIR]/IEdgeInsights/EdgeVideoAnalyticsMicroservice/eii/docker-compose.yml) file.

volumes:

- ../EdgeVideoAnalyticsMicroservice/resources:/home/pipeline-server/resources/

- ../EdgeVideoAnalyticsMicroservice/models_list/human_pose_estimation:/home/pipeline-server/models/human_pose_estimation

Note

Use the following volume mount path in docker-compose.yml([WORK_DIR]/IEdgeInsights/EdgeVideoAnalyticsMicroservice/eii/docker-compose.yml) file while independently deploying EdgeVideoAnalyticsMicroservice.

../models_list/human_pose_estimation:/home/pipeline-server/models/human_pose_estimation

EII UDFLoader Overview¶

UDFLoader is a library providing APIs for loading and executing native and python UDFs.

Dependency Installation¶

UDFLoader depends on the following libraries. Follow the documentation to install the libraries:

OpenCV - Run

source /opt/intel/openvino/bin/setupvars.shcommandPython3 Numpy package

Compilation¶

Utilizes CMake as the build tool for compiling the library. The simplest sequence of commands for building the library are shown below.

mkdir build

cd build

cmake ..

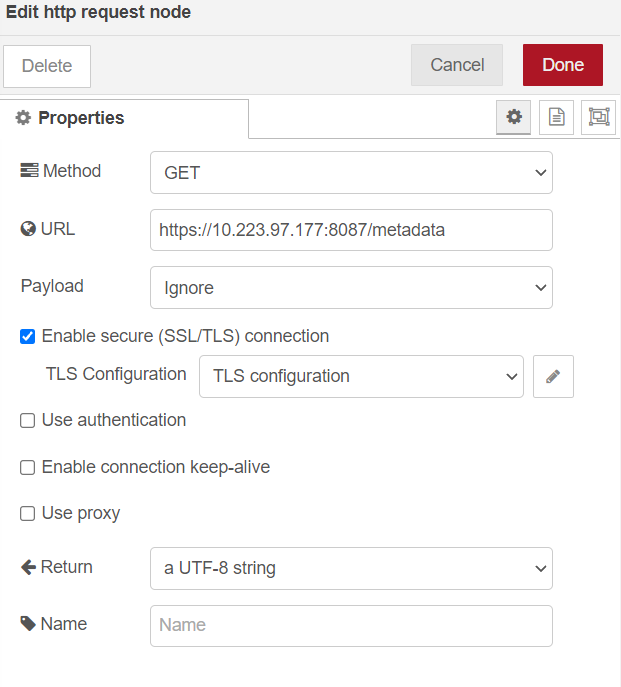

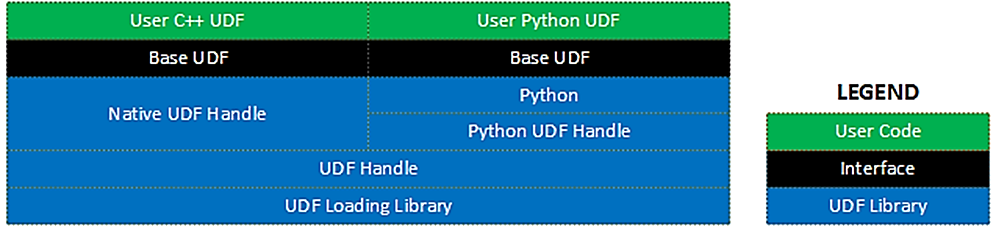

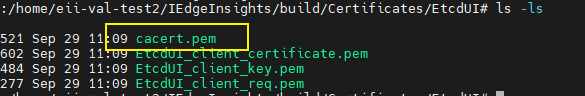

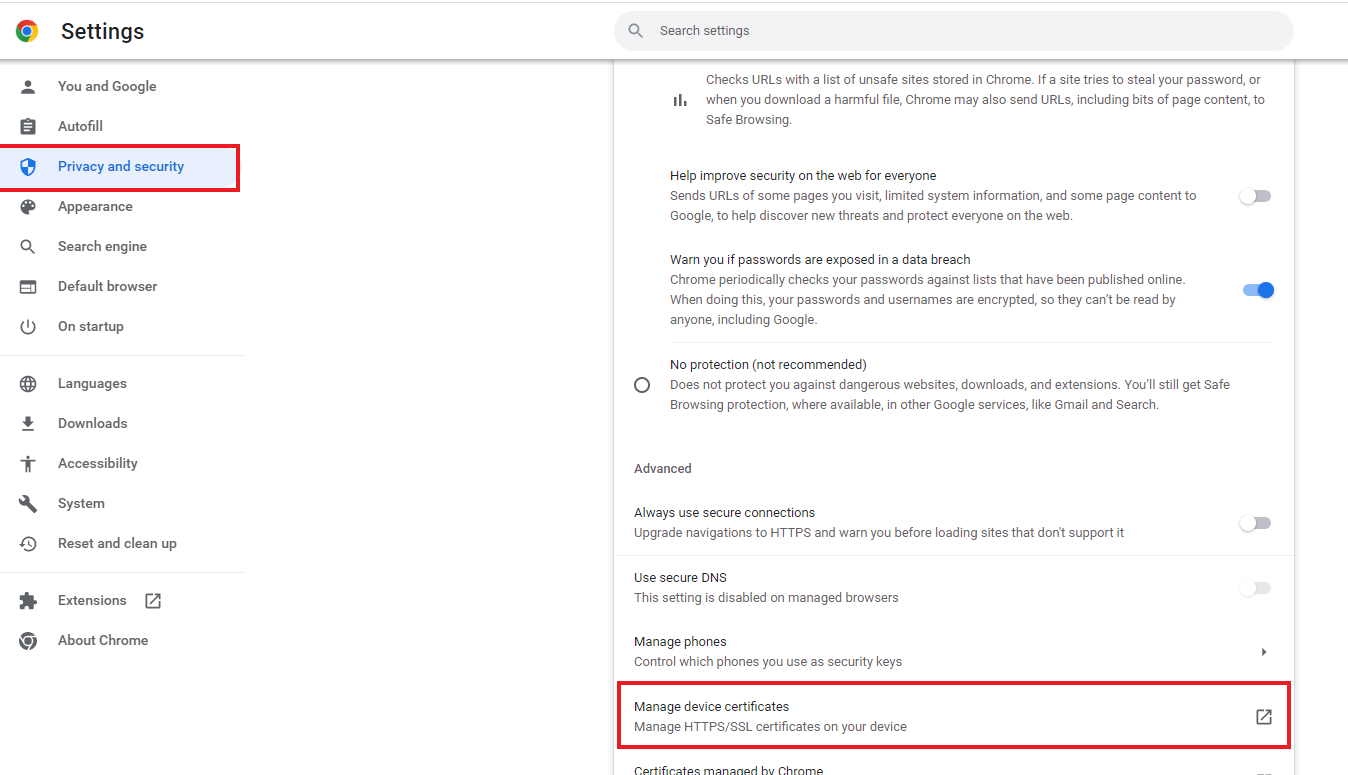

make