EII Tools¶

The EII stack includes the following tools, which also run as containers:

Time Series Benchmarking Tool¶

These scripts are designed to automate the running of benchmarking tests and the collection of the performance data. This performance data includes the Average Stats of each data stream, and also the CPU%, Memory%, and Memory Read/Write bandwidth.

The Processor Counter Monitor (PCM) is required for measuring memory read/write bandwidth, which can be downloaded and built here: https://github.com/opcm/pcm

If you do not have PCM on your system, those values will be blank in the output.ppc

Steps for running a benchmarking test case:

Configure the TimeSeriesProfiler to receive rfc_results, as described in **TimeSeriesProfiler README.md**.

Change the “command” option in the MQTT publisher **docker-compose.yml** to:

["--topic", "test/rfc_data", "--json", "./json_files/*.json", "--streams", "<streams>"]

For example:

["--topic", "test/rfc_data", "--json", "./json_files/*.json", "--streams", "1"]

Run execute_test.sh to execute the time series test case. Before running the following command, make sure that the “export_to_csv” value in **TimeSeriesProfiler config.json** is set to “True”:

USAGE:
./execute_test.sh TEST_DIR STREAMS SLEEP PCM_HOME [EII_HOME]

WHERE:
TEST_DIR - Directory containing services.yml and config files for influx, telegraf, and kapacitor
STREAMS - The number of streams (1, 2, 4, 8, 16)
SLEEP - The number of seconds to wait after the containers come up
PCM_HOME - The absolute path to the PCM repository where pcm.x is built
[EII_HOME] - [Optional] Absolute path to EII home directory, if running from a non-default location

For example:

sudo -E ./execute_test.sh $PWD/samples 2 10 /opt/intel/pcm /home/intel/IEdgeInsights
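When the benchmark is driven from another script, it can help to validate the arguments before invoking execute_test.sh. The following is a minimal sketch only: the helper name `build_execute_test_cmd` is hypothetical, and it assumes that the stream counts listed in the usage text (1, 2, 4, 8, 16) are the only accepted values.

```python
from typing import List, Optional

# Stream counts listed in the usage text; assumed here to be the only valid ones.
ALLOWED_STREAMS = (1, 2, 4, 8, 16)


def build_execute_test_cmd(test_dir: str, streams: int, sleep_s: int,
                           pcm_home: str, eii_home: Optional[str] = None) -> List[str]:
    """Return the argument list for execute_test.sh (hypothetical helper)."""
    if streams not in ALLOWED_STREAMS:
        raise ValueError(f"STREAMS must be one of {ALLOWED_STREAMS}, got {streams}")
    if sleep_s < 0:
        raise ValueError("SLEEP must be a non-negative number of seconds")
    cmd = ["sudo", "-E", "./execute_test.sh", test_dir,
           str(streams), str(sleep_s), pcm_home]
    # EII_HOME is optional and only appended when given.
    if eii_home is not None:
        cmd.append(eii_home)
    return cmd


if __name__ == "__main__":
    print(" ".join(build_execute_test_cmd("samples", 2, 10, "/opt/intel/pcm")))
```

The returned list can be passed to `subprocess.run` from the directory that contains execute_test.sh.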

The execution log, performance logs, and the output.ppc will be saved in TEST_DIR/output/< timestamp >/ so that the same test case can be run multiple times without overwriting the output. Check execution.log for any errors that occurred during the test, and see output.ppc for the results of a successful test.

The timeseries profiler output file (named “avg_latency_Results.csv” ) will be stored in TEST_DIR/output/< timestamp >/.

Video Benchmarking Tool¶

These scripts are designed to automate the running of benchmarking tests and the collection of the performance data. This performance data includes the FPS of each video stream, and also the CPU%, Memory%, and Memory Read/Write bandwidth.

The Processor Counter Monitor (PCM) is required for measuring memory read/write bandwidth, which can be downloaded and built here: https://github.com/opcm/pcm

If you do not have PCM on your system, those columns will be blank in the output.csv.

Note:

To run the gstreamer pipeline mentioned in sample_test/config.json, copy the required model files to [WORKDIR]/IEdgeInsights/VideoIngestion/models, as referenced in sample_test/config.json.

To use a MYRIAD device for inference, add user: root in the docker-compose.yml file for VI. If IPC mode is used, user: root needs to be added in the VideoProfiler docker-compose.yml file as well. The docker-compose.yml files of VI and VideoProfiler are picked from their respective repos, so any required changes should be applied in their respective repos.

Refer to README-Using-video-accelerators for using video accelerators.

Steps for running a benchmarking test case:

Ensure the VideoProfiler requirements are installed by following its README.

Start the RTSP server on a separate system on the network:

$ ./stream_rtsp.sh <number-of-streams> <starting-port-number> <bitrate> <width> <height> <framerate>

For example:

$ ./stream_rtsp.sh 16 8554 4096 1920 1080 30

Run execute_test.sh with the desired benchmarking config:

USAGE:
./execute_test.sh TEST_DIR STREAMS SLEEP PCM_HOME [EII_HOME]

WHERE:
TEST_DIR - The absolute path to directory containing services.yml for the services to be tested, and the config.json and docker-compose.yml for VI and VA if applicable.
STREAMS - The number of streams (1, 2, 4, 8, 16)
SLEEP - The number of seconds to wait after the containers come up
PCM_HOME - The absolute path to the PCM repository where pcm.x is built
EII_HOME - [Optional] The absolute path to EII home directory, if running from a non-default location

For example:

sudo -E ./execute_test.sh $PWD/sample_test 16 60 /opt/intel/pcm /home/intel/IEdgeInsights

The execution log, performance logs, and the output.csv will be saved in TEST_DIR/< timestamp >/ so that the same test case can be run multiple times without overwriting the output. Check execution.log for any errors that occurred during the test, and see output.csv for the results of a successful test.
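Because every run writes into its own timestamped directory, a script that post-processes results first has to find the newest run. A minimal sketch, assuming only that each run creates one sub-directory under the given path; the helper name `latest_run_dir` is hypothetical, and the directories are ordered by modification time because the timestamp format of their names is not documented here.

```python
from pathlib import Path
from typing import Optional


def latest_run_dir(test_dir: str) -> Optional[Path]:
    """Return the most recently modified sub-directory of test_dir, or None."""
    subdirs = [p for p in Path(test_dir).iterdir() if p.is_dir()]
    if not subdirs:
        return None
    # Order by modification time instead of parsing the directory names.
    return max(subdirs, key=lambda p: p.stat().st_mtime)


if __name__ == "__main__":
    run = latest_run_dir(".")
    print(run if run is not None else "no runs found")
```

The execution.log and output.csv of the latest run can then be opened relative to the returned path.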

DiscoverHistory tool¶

You can get history metadata and images from the InfluxDB and ImageStore containers using the DiscoverHistory tool.

Build and run the DiscoverHistory tool¶

This section describes how to build and run the DiscoverHistory tool in various modes, such as the PROD mode and the DEV mode. The ia_common and ia_eiibase base images must be available on the node where the DiscoverHistory tool runs. If EII and the DiscoverHistory tool are not running on the same node, you must build the ia_common and ia_eiibase base images on the node that runs the tool.

Prerequisites¶

As a prerequisite to run the DiscoverHistory tool, a set of config, interfaces, public, and private keys should be present in etcd. To meet the prerequisite, ensure that an entry for the DiscoverHistory tool with its relative path from the [WORK_DIR]/IEdgeInsights directory is set in the video-streaming-storage.yml file in the [WORK_DIR]/IEdgeInsights/build/usecases/ directory. For more information, see the following example:

AppContexts:

- VideoIngestion

- VideoAnalytics

- Visualizer

- WebVisualizer

- tools/DiscoverHistory

- ImageStore

- InfluxDBConnector

Run the DiscoverHistory tool in the PROD mode¶

After completing the prerequisites, perform the following steps to run the DiscoverHistory tool in the PROD mode:

1. Open the config.json file.

2. Enter the query for InfluxDB.

3. Run the following command to generate the new docker-compose.yml that includes DiscoverHistory:

   python3 builder.py -f usecases/video-streaming-storage.yml

4. Provision, build, and run the DiscoverHistory tool along with the EII video-streaming-storage recipe or stack. For more information, refer to the EII README.

5. Check if the imagestore and influxdbconnector services are running.

6. Locate the data and the frames directories from the following path: /opt/intel/eii/tools_output.

   Note: The frames directory will be created only if img_handle is part of the select statement.

7. Use the ETCDUI to change the query in the configuration.

8. Run the following command to start the container with the new configuration:

   docker restart ia_discover_history

Run the DiscoverHistory tool in the DEV mode¶

After completing the prerequisites, perform the following steps to run the DiscoverHistory tool in the DEV mode:

1. Open the .env file from the [WORK_DIR]/IEdgeInsights/build directory.

2. Set the DEV_MODE variable to true.

Run the DiscoverHistory tool in the zmq_ipc mode¶

After completing the prerequisites, to run the DiscoverHistory tool in the zmq_ipc mode, modify the interface section of the config.json file as follows:

{

"type": "zmq_ipc",

"EndPoint": "/EII/sockets"

}

Sample select queries¶

The following table shows sample select queries and their details:

Note: Include the following parameters in the query to get the good and the bad frames:

The following examples show how to include the parameters:

“select img_handle, defects, encoding_level, encoding_type, height, width, channel from camera1_stream_results order by desc limit 10”

“select * from camera1_stream_results order by desc limit 10”
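Since the frames directory is created only when img_handle is part of the select statement, a query can be checked before it is written to the configuration. A rough sketch, assuming a simple "select ... from ..." query and assuming that `*` also returns img_handle; the function name `selects_img_handle` is hypothetical and is not part of the DiscoverHistory tool.

```python
import re


def selects_img_handle(query: str) -> bool:
    """Return True if the select list names img_handle or uses '*'."""
    match = re.match(r"\s*select\s+(.*?)\s+from\s", query,
                     flags=re.IGNORECASE | re.DOTALL)
    if match is None:
        raise ValueError("not a select query")
    # Split the select list into individual, lower-cased column names.
    columns = [c.strip().lower() for c in match.group(1).split(",")]
    return "*" in columns or "img_handle" in columns


if __name__ == "__main__":
    q = "select img_handle, defects from camera1_stream_results order by desc limit 10"
    print(selects_img_handle(q))
```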

Multi-instance feature support for the Builder script with the DiscoverHistory tool¶

The multi-instance feature support of Builder works only for the video pipeline ([WORK_DIR]/IEdgeInsights/build/usecases/video-streaming.yml). For more details, refer to the EII core README.

In the following example you can view how to change the configuration to use the builder.py script -v 2 feature with 2 instances of the DiscoverHistory tool enabled:


EmbPublisher¶

This tool acts as a brokered publisher of the EII message bus.

Telegraf’s EII message bus input plugin acts as a subscriber to the EII broker.

How to integrate this tool with video/timeseries use case.¶

In ‘time-series.yml’/’video-streaming.yml’ file, please add ‘ZmqBroker’ and ‘tools/EmbPublisher’ components.

Use the modified ‘time-series.yml’/’video-streaming.yml’ file as an argument while generating the docker-compose.yml file using the ‘builder.py’ utility.

Follow usual provisioning and starting process.

Configuration of the tool.¶

Let us look at the sample configuration

{

"config": {

"pub_name": "TestPub",

"msg_file": "data1k.json",

"iteration": 10,

"interval": "5ms"

},

"interfaces": {

"Publishers": [

{

"Name": "TestPub",

"Type": "zmq_tcp",

"AllowedClients": [

"*"

],

"EndPoint": "ia_zmq_broker:60514",

"Topics": [

"topic-pfx1",

"topic-pfx2",

"topic-pfx3",

"topic-pfx4"

],

"BrokerAppName" : "ZmqBroker",

"brokered": true

}

]

}

}

-pub_name : The name of the publisher in the interface.

-topics: The names of the topics, separated by commas, for which the publisher needs to be started.

-msg_file : The file containing the JSON data, which represents the single data point (files should be kept in a directory named ‘datafiles’).

-num_itr : The number of iterations

-int_btw_itr: The interval between any two iterations
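Because pub_name has to match the name of a publisher in the interface, a quick consistency check on config.json can catch mismatches before the container is started. A minimal sketch based only on the sample configuration above; the function name `check_publisher_config` and the wording of the reported problems are hypothetical.

```python
import json

# Shortened copy of the sample configuration shown above.
SAMPLE = json.loads("""
{
  "config": {"pub_name": "TestPub", "msg_file": "data1k.json",
             "iteration": 10, "interval": "5ms"},
  "interfaces": {"Publishers": [{"Name": "TestPub", "Type": "zmq_tcp",
                                 "AllowedClients": ["*"],
                                 "EndPoint": "ia_zmq_broker:60514",
                                 "Topics": ["topic-pfx1", "topic-pfx2"],
                                 "BrokerAppName": "ZmqBroker", "brokered": true}]}
}
""")


def check_publisher_config(cfg: dict) -> list:
    """Return a list of problems found; an empty list means none were found."""
    problems = []
    publishers = cfg.get("interfaces", {}).get("Publishers", [])
    names = [p.get("Name") for p in publishers]
    pub_name = cfg.get("config", {}).get("pub_name")
    # pub_name must refer to one of the publishers defined in the interface.
    if pub_name not in names:
        problems.append(f"pub_name {pub_name!r} not found in Publishers {names}")
    for pub in publishers:
        if not pub.get("Topics"):
            problems.append(f"publisher {pub.get('Name')!r} has no Topics")
    return problems


if __name__ == "__main__":
    print(check_publisher_config(SAMPLE))
```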

Running EmbPublisher in IPC mode¶

To run in IPC mode, modify the interfaces section of **config.json** as follows:

"interfaces": {

"Publishers": [

{

"Name": "TestPub",

"Type": "zmq_ipc",

"AllowedClients": [

"*"

],

"EndPoint": {

"SocketDir": "/EII/sockets",

"SocketFile": "frontend-socket"

},

"Topics": [

"topic-pfx1",

"topic-pfx2",

"topic-pfx3",

"topic-pfx4"

],

"BrokerAppName" : "ZmqBroker",

"brokered": true

}

]

}
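Comparing the TCP and IPC samples, the only fields that differ are "Type" and "EndPoint". The following sketch applies that change to an existing interface entry. The function name `to_ipc` is hypothetical, and the socket directory default is simply the value used in the sample above.

```python
import copy


def to_ipc(interface: dict, socket_file: str,
           socket_dir: str = "/EII/sockets") -> dict:
    """Return a copy of an interface entry switched from zmq_tcp to zmq_ipc."""
    ipc = copy.deepcopy(interface)  # leave the caller's dict untouched
    ipc["Type"] = "zmq_ipc"
    ipc["EndPoint"] = {"SocketDir": socket_dir, "SocketFile": socket_file}
    return ipc


if __name__ == "__main__":
    tcp = {"Name": "TestPub", "Type": "zmq_tcp",
           "EndPoint": "ia_zmq_broker:60514", "Topics": ["topic-pfx1"]}
    print(to_ipc(tcp, "frontend-socket"))
```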


EmbSubscriber¶

EmbSubscriber subscribes to messages coming from a publisher. It subscribes to a message bus topic to get the data.

EII pre-requisites¶

EmbSubscriber expects a set of config, interfaces & public private keys to be present in ETCD as a pre-requisite. To achieve this, please ensure an entry for EmbSubscriber with its relative path from IEdgeInsights directory is set in the time-series.yml file present in IEdgeInsights directory. An example has been provided below:

AppName:
- Grafana
- InfluxDBConnector
- Kapacitor
- Telegraf
- tools/EmbSubscriber

With the above pre-requisite done, please run the below command:

$ cd [WORKDIR]/IEdgeInsights/build
$ python3 builder.py -f usecases/time-series.yml

Running EmbSubscriber¶

Refer to ../README.md to provision, build, and run the tool along with the EII time-series recipe/stack.

Running EmbSubscriber in IPC mode¶

To run in IPC mode, modify the interfaces section of **config.json** as follows:

{

"config": {},

"interfaces": {

"Subscribers": [

{

"Name": "TestSub",

"PublisherAppName": "Telegraf",

"Type": "zmq_ipc",

"EndPoint": {

"SocketDir": "/EII/sockets",

"SocketFile": "telegraf-out"

},

"Topics": [

"*"

]

}

]

}

}


EII GigEConfig tool¶

The GigEConfig tool can be used to read the Basler Camera properties from the Pylon Feature Stream file and construct a gstreamer pipeline with the required camera features. The gstreamer pipeline which is generated by the tool can either be printed on the console or can be used to update the config manager storage.

Note: This tool has been verified with Basler cameras only, as the PFS file, which is a prerequisite for this tool, is specific to the Basler Pylon Camera Software Suite.

Generating PFS(Pylon Feature Stream) File¶

To execute this tool, the user has to provide a PFS file as a prerequisite. The PFS file can be generated using the Pylon Viewer application for the respective Basler camera by following the steps below:

Refer to the link below to install and get an overview of the Pylon Viewer application: https://docs.baslerweb.com/overview-of-the-pylon-viewer

Execute the below steps to run the Pylon Viewer application:

$ sudo <PATH>/pylon5/bin/PylonViewerApp

Using the Pylon Viewer application, follow the steps below to generate a PFS file for the required camera.

Select the required camera and open it

Configure the camera with the required settings if required

On the application toolbar, select Camera tab-> Save Features..

Close the camera

Note: If you need to configure the camera using Pylon Viewer, make sure the device is not used by another application, as it can be controlled by only one application at a time.

Running GigEConfig Tool¶

Before executing the tool, make sure the following steps are completed:

Make sure that provisioning is done.

Source build/.env to get all the required ENVs:

$ set -a
$ source [WORKDIR]/IEdgeInsights/build/.env
$ set +a

Install the dependencies:

Note: It is highly recommended that you use a python virtual environment to install the python packages, so that the system python installation doesn’t get altered. Details on setting up and using python virtual environment can be found here: https://www.geeksforgeeks.org/python-virtual-environment/

$ pip3 install -r requirements.txt

If using GigE tool in PROD mode, make sure to set required permissions to certificates.

$ sudo chmod -R 755 [WORKDIR]/IEdgeInsights/build/provision/Certificates

Note: This step is required every time provisioning is done.

Caution: This step will make the certs insecure. Do not do it on a production machine.

Usage of GigEConfig tool:¶

Script Usage:

$ python3 GigEConfig.py --help

$ python3 GigEConfig.py [-h] --pfs_file PFS_FILE [--etcd] [--ca_cert CA_CERT]

[--root_key ROOT_KEY] [--root_cert ROOT_CERT]

[--app_name APP_NAME] [-host HOSTNAME] [-port PORT]

Tool for updating pipeline according to user requirement

optional arguments:
  -h, --help            show this help message and exit
  --pfs_file PFS_FILE, -f PFS_FILE
                        To process pfs file genrated by PylonViwerApp (default: None)
  --etcd, -e            Set for updating etcd config (default: False)
  --ca_cert CA_CERT, -c CA_CERT
                        Provide path of ca_certificate.pem (default: None)
  --root_key ROOT_KEY, -r_k ROOT_KEY
                        Provide path of root_client_key.pem (default: None)
  --root_cert ROOT_CERT, -r_c ROOT_CERT
                        Provide path of root_client_certificate.pem (default: None)
  --app_name APP_NAME, -a APP_NAME
                        For providing appname of VideoIngestion instance (default: VideoIngestion)
  --hostname HOSTNAME, -host HOSTNAME
                        Etcd host IP (default: localhost)
  --port PORT, -port PORT
                        Etcd host port (default: 2379)

config.json consists of a mapping between the PFS file elements and the camera properties. The constructed pipeline will contain only the elements specified in it.

The user needs to provide the following elements:

pipeline_constant: Specify the constant gstreamer element of pipeline.

plugin_name: The name of the gstreamer source plugin used

device_serial_number: Serial number of the device to which the plugin needs to connect

plugin_properties: Properties to be integrated in the pipeline. The keys here are mapped to the respective gstreamer properties.

Execution of GigEConfig¶

The tool can be executed in the following manner:

$ cd [WORKDIR]/IEdgeInsights/tools/GigEConfig

Modify config.json based on the requirements

If the etcd configuration needs to be updated:

a. For DEV Mode

$ python3 GigEConfig.py --pfs_file <path to pylon's pfs file> -e

b. For PROD Mode

Before running in PROD mode, change the permissions of the certificates:

$ sudo chmod 755 -R [WORK_DIR]/IEdgeInsights/build/provision/Certificates

$ python3 GigEConfig.py -f <path to pylon's pfs file> -c [WORK_DIR]/IEdgeInsights/build/provision/Certificates/ca/ca_certificate.pem -r_k [WORK_DIR]/IEdgeInsights/build/provision/Certificates/root/root_client_key.pem -r_c [WORK_DIR]/IEdgeInsights/build/provision/Certificates/root/root_client_certificate.pem -e

In case only pipeline needs to be printed.

$ python3 GigEConfig.py --pfs_file <path to pylon's pfs file>

If the host or port needs to be specified for etcd:

a. For DEV Mode

$ python3 GigEConfig.py --pfs_file <path to pylon's pfs file> -e -host <etcd_host> -port <etcd_port>

b. For PROD Mode

Before running in PROD mode, change the permissions of the certificates:

$ sudo chmod 755 -R [WORK_DIR]/IEdgeInsights/build/provision/Certificates

$ python3 GigEConfig.py -f <path to pylon's pfs file> -c [WORK_DIR]/IEdgeInsights/build/provision/Certificates/ca/ca_certificate.pem -r_k [WORK_DIR]/IEdgeInsights/build/provision/Certificates/root/root_client_key.pem -r_c [WORK_DIR]/IEdgeInsights/build/provision/Certificates/root/root_client_certificate.pem -e -host <etcd_host> -port <etcd_port>
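The DEV and PROD invocations differ only in the certificate arguments, so the command line can be assembled from a few inputs. A minimal sketch using only the flags and certificate file locations that appear in the commands above; the helper name `gige_config_cmd` is hypothetical, and passing a certificates directory is assumed here to mean PROD mode.

```python
from pathlib import PurePosixPath
from typing import List, Optional


def gige_config_cmd(pfs_file: str, update_etcd: bool = False,
                    certs_dir: Optional[str] = None,
                    host: Optional[str] = None,
                    port: Optional[int] = None) -> List[str]:
    """Build the GigEConfig.py argument list (hypothetical helper)."""
    cmd = ["python3", "GigEConfig.py", "--pfs_file", pfs_file]
    if certs_dir is not None:
        # PROD mode: certificate paths relative to the provisioned Certificates dir.
        certs = PurePosixPath(certs_dir)
        cmd += ["-c", str(certs / "ca" / "ca_certificate.pem"),
                "-r_k", str(certs / "root" / "root_client_key.pem"),
                "-r_c", str(certs / "root" / "root_client_certificate.pem")]
    if update_etcd:
        cmd.append("-e")
    if host is not None:
        cmd += ["-host", host]
    if port is not None:
        cmd += ["-port", str(port)]
    return cmd


if __name__ == "__main__":
    print(" ".join(gige_config_cmd("camera.pfs", update_etcd=True, port=2379)))
```

Omitting `update_etcd` yields the print-only form of the command.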

HttpTestServer¶

HttpTestServer runs a simple HTTP Test server with security being optional.

Pre-requisites for running the HttpTestServer¶

To install EII libs on bare-metal, follow the README of eii_libs_installer.

Generate the certificates required to run the Http Test Server using the following command

$ ./generate_testserver_cert.sh test-server-ip

Starting HttpTestServer¶

Run the commands below to start the HttpTestServer:

$ cd IEdgeInsights/tools/HttpTestServer
$ go run TestServer.go --dev_mode false --host <address of test server> --port <port of test server> --rdehost <address of Rest Data Export server> --rdeport <port of Rest Data Export server>

For example:

$ go run TestServer.go --dev_mode false --host=0.0.0.0 --port=8082 --rdehost=localhost --rdeport=8087

NOTE: server_cert.pem is valid for 365 days from the day of generation.

In PROD mode, you might see intermediate logs like this:

http: TLS handshake error from 127.0.0.1:51732: EOF

These logs appear because RestExport checks whether the server is present by pinging it without using any certs. They can be ignored.


Jupyter Notebook usage to develop python UDFs¶

UDF development in python can be done using the web based IDE of Jupyter Notebook. Jupyter Notebook is an open-source web application that allows you to create and share documents that contain live code, equations, visualizations and narrative text. Uses include: data cleaning and transformation, numerical simulation, statistical modeling, data visualization, machine learning, and much more.

This tool acts as an interface between user and Jupyter Notebook service allowing the user to interact with Jupyter Notebook to write, edit, experiment and create python UDFs.

It works along with the jupyter_connector UDF for enabling the IDE for udf development.

Jupyter Notebook pre-requisites¶

Jupyter Notebook expects a set of config, interfaces & public private keys to be present in ETCD as a pre-requisite.

To achieve this, please ensure an entry for Jupyter Notebook with its relative path from IEdgeInsights directory is set in any of the .yml files present in IEdgeInsights directory.

An example of adding the entry in video-streaming.yml is provided below:

AppContexts:
---snip---
- tools/JupyterNotebook

Ensure the jupyter_connector UDF is enabled in the config of either VideoIngestion or VideoAnalytics to be connected to JupyterNotebook. An example has been provided here for connecting VideoIngestion to JupyterNotebook, the config to be changed being present at config.json:

{
    "config": {
        "encoding": {
            "type": "jpeg",
            "level": 95
        },
        "ingestor": {
            "type": "opencv",
            "pipeline": "./test_videos/pcb_d2000.avi",
            "loop_video": true,
            "queue_size": 10,
            "poll_interval": 0.2
        },
        "sw_trigger": {
            "init_state": "running"
        },
        "max_workers": 4,
        "udfs": [{
            "name": "jupyter_connector",
            "type": "python",
            "param1": 1,
            "param2": 2.0,
            "param3": "str"
        }]
    }
}

Running Jupyter Notebook¶

With the above pre-requisite done, please run the below command:

python3 builder.py -f usecases/video-streaming.yml

Refer to IEdgeInsights/README.md to provision, build, and run the tool along with the EII recipe/stack.

Run the following command to see the logs:

docker logs -f ia_jupyter_notebook

Copy the URL (along with the token) from the above logs and paste it in a browser. Below is a sample URL:

http://127.0.0.1:8888/?token=5839f4d1425ecf4f4d0dd5971d1d61b7019ff2700804b973

Replace the ‘127.0.0.1’ IP address with the host IP if you are accessing the server remotely.
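For remote access, the host part of the logged URL has to be swapped while the port and token stay unchanged. A small sketch using Python's standard urllib.parse; the function name `with_host` and the example IP address are hypothetical.

```python
from urllib.parse import urlsplit, urlunsplit


def with_host(url: str, host_ip: str) -> str:
    """Replace the host of a URL while keeping scheme, port, path and query."""
    parts = urlsplit(url)
    port = f":{parts.port}" if parts.port is not None else ""
    return urlunsplit((parts.scheme, f"{host_ip}{port}",
                       parts.path, parts.query, parts.fragment))


if __name__ == "__main__":
    # 192.0.2.10 is a documentation-only example address.
    print(with_host("http://127.0.0.1:8888/?token=abc", "192.0.2.10"))
```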

Once the Jupyter Notebook service is launched in the browser, run the main.ipynb file visible in the list of files available.

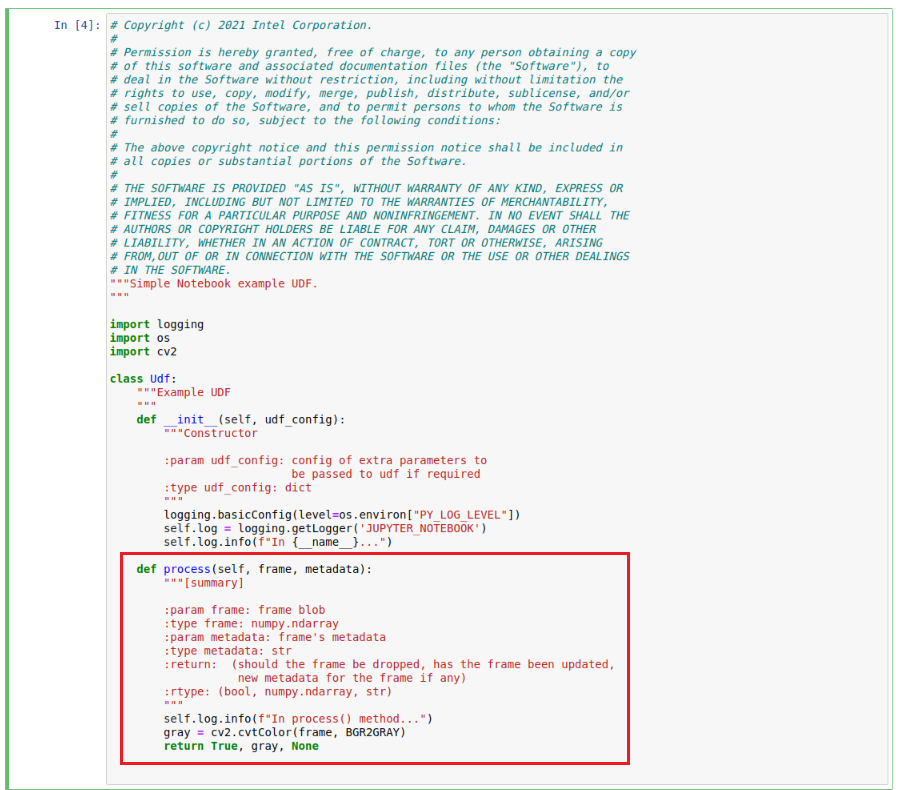

The process method of udf_template.ipynb file available in the list of files can be altered and re-run to experiment and test the UDF.

If any parameters are to be sent to the custom udf by the user, they can be added in the jupyter_connector UDF config provided to either VideoIngestion or VideoAnalytics and can be accessed in the udf_template.ipynb constructor in the udf_config parameter which is a dict containing all these parameters. A sample UDF for reference has been provided at udf_template.ipynb.

Note: After altering/creating a new udf, run main.ipynb and restart VideoIngestion or VideoAnalytics with which you have enabled jupyter notebook service.

Once the user is satisfied with the functionality of the UDF, the udf can be saved/exported by clicking on the Download as option and selecting (.py) option. The downloaded udf can then be directly used by placing it in the ../../common/video/udfs/python directory or can be integrated and used with CustomUDFs.

Note:

JupyterNotebook is not to be used with CustomUDFs like GVASafetyGearIngestion, since they are specific to certain usecases only. Instead, the VideoIngestion pipeline can be modified to use the GVA ingestor pipeline, and the config can be modified to use the jupyter_connector UDF.

A sample opencv udf template is provided at opencv_udf_template.ipynb to serve as an example for how a user can write an OpenCV UDF and modify it however required. This sample UDF uses OpenCV APIs to write a sample text on the frames, which can be visualized in the Visualizer display. Please ensure encoding is disabled when using this UDF since encoding enabled automatically removes the text added onto the frames.

Creating UDF in Jupyter Notebook¶

After running main.ipynb, select udf_template.ipynb to start creating your own python UDF.

Edit the process method in the Udf class

Run the cell

Check the output on the visualizer
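To make the steps above more concrete, the sketch below shows the general shape such a Udf class might take. It is an illustration only and not the contents of udf_template.ipynb: the constructor receiving a udf_config dict follows the description earlier in this section, while the process signature and its three-value return convention are assumptions.

```python
class Udf:
    """Illustrative UDF skeleton; not the actual udf_template.ipynb contents."""

    def __init__(self, udf_config):
        # udf_config is described as a dict holding the parameters set in the
        # jupyter_connector UDF config (for example param1, param2, param3).
        self.param1 = udf_config.get("param1", 0)

    def process(self, frame, metadata):
        # Assumed convention: return (drop_frame, frame, metadata), where a
        # frame of None means the frame is left unchanged.
        metadata["param1_seen"] = self.param1
        return False, None, metadata


if __name__ == "__main__":
    udf = Udf({"param1": 1, "param2": 2.0, "param3": "str"})
    print(udf.process(frame=[[0, 0], [0, 0]], metadata={}))
```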


MQTT publisher¶

MQTT publisher is a tool to help publish the sample sensor data.

Usage¶

Note

This assumes you have already installed and configured Docker.

Building and bringing up the tool

Run the broker (use docker ps to see if the broker has started successfully, as the container starts in detached mode):

$ ./broker.sh <port>

NOTE: To run the broker for the EII TimeSeries Analytics usecase:

$ ./broker.sh 1883

Start the mqtt-publisher:

$ cd publisher
$ set -a
$ source ../../../build/.env
$ set +a
$ docker-compose build
$ docker-compose up -d

Note: By default the tool publishes temperature data. To publish other data, modify the command option of the “ia_mqtt_publisher” service in docker-compose.yml accordingly and recreate the container by running docker-compose up -d from the publisher directory.

To publish temperature data to default topic, command option by default is set to:

["--temperature", "10:30"]

To publish temperature and humidity data together, change the command option to:

["--temperature", "10:30", "--humidity", "10:30", "--topic_humd", "temperature/simulated/0"]

To publish multiple sensor data (temperature, pressure, humidity) to the default topics (temperature/simulated/0, pressure/simulated/0, humidity/simulated/0), change the command option to:

["--temperature", "10:30", "--pressure", "10:30", "--humidity", "10:30"]

To publish to different topics instead of the default topics, change the command option to:

["--temperature", "10:30", "--pressure", "10:30", "--humidity", "10:30", "--topic_temp", <temperature topic>, "--topic_pres", <pressure topic>, "--topic_humd", <humidity topic>]

It is possible to publish data from more than one sensor to a single topic. In that case, give the same topic name for each of those sensors.
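The sensor-related command options above all follow the same pattern: one value flag per sensor, optionally followed by a topic flag. The sketch below generates such a list. It uses only the flag names shown in the examples; the helper name `publisher_command` is hypothetical, and the "10:30" values are passed through unchanged because their exact meaning is not described here.

```python
import json
from typing import Dict, List, Optional

# Topic flag for each sensor, as used in the command examples above.
TOPIC_FLAGS = {"temperature": "--topic_temp",
               "pressure": "--topic_pres",
               "humidity": "--topic_humd"}


def publisher_command(values: Dict[str, str],
                      topics: Optional[Dict[str, str]] = None) -> List[str]:
    """Build the docker-compose 'command' list for the selected sensors."""
    topics = topics or {}
    unknown = (set(values) | set(topics)) - set(TOPIC_FLAGS)
    if unknown:
        raise ValueError(f"unsupported sensors: {sorted(unknown)}")
    cmd: List[str] = []
    for sensor in TOPIC_FLAGS:          # value flags first, in a fixed order
        if sensor in values:
            cmd += [f"--{sensor}", values[sensor]]
    for sensor in TOPIC_FLAGS:          # then any topic overrides
        if sensor in topics:
            cmd += [TOPIC_FLAGS[sensor], topics[sensor]]
    return cmd


if __name__ == "__main__":
    cmd = publisher_command({"temperature": "10:30", "humidity": "10:30"},
                            {"humidity": "temperature/simulated/0"})
    print(json.dumps(cmd))
```

Giving two sensors the same topic name, as in the usage above, publishes both into a single topic.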

For publishing data from csv row by row, change the command option to:

["--csv", "demo_datafile.csv", "--sampling_rate", "10", "--subsample", "1"]

To publish JSON files (to test random forest UDF), change the command option to:

["--topic", "test/rfc_data", "--json", "./json_files/*.json", "--streams", "1"]

To see the messages going over MQTT, run the subscriber with the following command:

$ ./subscriber.sh <port>

Example: If the broker runs at port 1883, run the subscriber with the following command:

$ ./subscriber.sh 1883


Software Trigger Utility for VideoIngestion Module¶

This utility is used for invoking various software trigger features of VideoIngestion. The currently supported triggers to VideoIngestion module are:

START_INGESTION - to start the ingestor

STOP_INGESTION - to stop the ingestor

SNAPSHOT - to get a frame snapshot, which feeds only one frame into the video data pipeline.

Software Trigger Utility pre-requisites¶

SWTriggerUtility expects a set of config, interfaces & public private keys to be present in ETCD as a pre-requisite.

To achieve this, please ensure an entry for SWTriggerUtility with its relative path from IEdgeInsights directory is set in any of the .yml files present in IEdgeInsights directory.

An example of adding the entry in video-streaming.yml is provided below:

AppContexts:
---snip---
- tools/SWTriggerUtility

Configuration file:¶

config.json is the configuration file used for sw_trigger_utility.

| Field | Meaning | Type of the value |
|---|---|---|

Note:

When working with GigE cameras, which require network_mode: host, update the EndPoint key of the SWTriggerUtility interface in config.json to have the host system IP instead of the service name of the server.

Eg. In order to connect to `ia_video_ingestion` service which is configured with GigE camera refer the below `EndPoint` change in the SWTriggerUtility interface:

```javascript

{

"Clients": [

{

"EndPoint": "<HOST_SYSTEM_IP>:64013",

"Name": "default",

"ServerAppName": "VideoIngestion",

"Type": "zmq_tcp"

}

]

}

```

If you need to change the values in the pre-requisites section, re-run the steps mentioned in the pre-requisites section so that the updated changes are applied, OR update the config key of the SWTriggerUtility app via the ETCD UI and then restart the application.

This utility works in both dev and prod mode. As a pre-requisite, make sure to set the “dev_mode” flag to true or false accordingly in the config.json file.

Running Software Trigger Utility¶

EII services can run in prod or dev mode, depending on the DEV_MODE value set in build/.env.

Execute the builder.py script:

$ cd [WORKDIR]/IEdgeInsights/build/
$ python3 builder.py -f usecases/video-streaming.yml

NOTE: The same yml file to which the SWTriggerUtility entry was added in pre-requisites has to be selected while running pre-requisites

Run provisioning step as below:

$ cd [WORKDIR]/IEdgeInsights/build/provision
$ sudo -E ./provision.sh ../docker-compose.yml

Usage of Software Trigger Utility¶

By default the SW Trigger Utility container will not execute anything and one needs to interact with the running container to generate the trigger commands. Make sure VI service is up and ready to process the commands from the utility.

Software trigger utility can be used in following ways:

1: “START INGESTION” -> “Allows ingestion for default time (120 seconds being default)” -> “STOP INGESTION”

```sh

$ cd [WORKDIR]/IEdgeInsights/build

$ docker exec ia_sw_trigger_utility ./sw_trigger_utility

```

2: “START INGESTION” -> “Allows ingestion for user defined time (configurable time in seconds)” -> “STOP INGESTION”

```sh

$ cd [WORKDIR]/IEdgeInsights/build

$ docker exec ia_sw_trigger_utility ./sw_trigger_utility 300

```

**Note**: In the above example, VideoIngestion starts, ingests for 300 seconds, and then stops ingestion. The cycle repeats for the number of cycles configured in config.json.

3: Selectively send START_INGESTION software trigger:

```sh

$ cd [WORKDIR]/IEdgeInsights/build

$ docker exec ia_sw_trigger_utility ./sw_trigger_utility START_INGESTION

```

4: Selectively send STOP_INGESTION software trigger:

```sh

$ cd [WORKDIR]/IEdgeInsights/build

$ docker exec ia_sw_trigger_utility ./sw_trigger_utility STOP_INGESTION

```

5: Selectively send SNAPSHOT software trigger:

```sh

$ cd [WORKDIR]/IEdgeInsights/build

$ docker exec ia_sw_trigger_utility ./sw_trigger_utility SNAPSHOT

```

Note:

If duplicate START_INGESTION or STOP_INGESTION sw_triggers are sent by the client by mistake, VI catches these duplicates and responds to the client, conveying that duplicate triggers were sent and requesting proper sw_triggers.

To send the SNAPSHOT trigger, ensure that ingestion is stopped. If a START_INGESTION trigger was sent previously, stop the ingestion using the STOP_INGESTION trigger.
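The trigger rules in the note above can be summarized as a small state model. The sketch below is a toy illustration of those rules only. It is not the VideoIngestion implementation, and the class name `IngestionModel` and the response strings are hypothetical.

```python
class IngestionModel:
    """Toy model of the documented trigger rules; not the VI implementation."""

    def __init__(self, running: bool = False):
        self.running = running

    def send(self, trigger: str) -> str:
        if trigger == "START_INGESTION":
            if self.running:
                return "duplicate trigger"      # already ingesting
            self.running = True
            return "ingestion started"
        if trigger == "STOP_INGESTION":
            if not self.running:
                return "duplicate trigger"      # already stopped
            self.running = False
            return "ingestion stopped"
        if trigger == "SNAPSHOT":
            # SNAPSHOT is only expected while ingestion is stopped.
            if self.running:
                return "stop ingestion before SNAPSHOT"
            return "one frame ingested"
        raise ValueError(f"unsupported trigger: {trigger}")


if __name__ == "__main__":
    vi = IngestionModel(running=True)
    for t in ("START_INGESTION", "SNAPSHOT", "STOP_INGESTION", "SNAPSHOT"):
        print(t, "->", vi.send(t))
```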


EII TimeSeriesProfiler¶

This module calculates the SPS (samples per second) of any EII time-series module based on the stream published by that module.

This module also calculates the average e2e time taken to process each data sample, and its breakup. The e2e time is the end-to-end time required for a metric to travel from mqtt-publisher to TimeSeriesProfiler (mqtt-publisher->telegraf->influx->kapacitor->influx->influxdbconnector->TimeSeriesProfiler).

EII pre-requisites¶

TimeSeriesProfiler expects a set of config, interfaces & public private keys to be present in ETCD as a pre-requisite.

To achieve this, please ensure an entry for TimeSeriesProfiler with its relative path from IEdgeInsights directory is set in the time-series.yml file present in IEdgeInsights directory. An example has been provided below:

AppContexts:
- Grafana
- InfluxDBConnector
- Kapacitor
- Telegraf
- tools/TimeSeriesProfiler

With the above pre-requisite done, please run the below command:

python3 builder.py -f ./usecases/time-series.yml

EII TimeSeriesProfiler modes¶

By default the EII TimeSeriesProfiler supports two modes, which are 'sps' & 'monitor' mode.

SPS mode

Enabled by setting the ‘mode’ key in config to ‘sps’, this mode calculates the samples per second of any EII module by subscribing to that module’s respective stream.

"mode": "sps"

Monitor mode

Enabled by setting the ‘mode’ key in config to ‘monitor’, this mode calculates average & per sample stats

Refer to the example config below, where TimeSeriesProfiler is used in monitor mode.

"config": {
    "mode": "monitor",
    "monitor_mode_settings": {
        "display_metadata": false,
        "per_sample_stats": false,
        "avg_stats": true
    },
    "total_number_of_samples": 5,
    "export_to_csv": false
}

"mode": "monitor"

The stats to be displayed by the tool in monitor mode can be set in the monitor_mode_settings key of config.json (https://github.com/open-edge-insights/eii-tools/blob/master/TimeSeriesProfiler/config.json).

‘display_metadata’: Displays the raw meta-data with timestamps associated with every sample.

‘per_sample_stats’: Continuously displays the per sample metrics of every sample.

‘avg_stats’: Continuously displays the average metrics of every sample.

EII TimeSeriesProfiler configurations¶

total_number_of_samples

If mode is set to ‘sps’, the average SPS is calculated for the number of samples set by this variable. If mode is set to ‘monitor’, the average stats is calculated for the number of samples set by this variable. Setting it to (-1) will run the profiler forever unless terminated by stopping container TimeSeriesProfiler manually. total_number_of_samples should never be set as (-1) for ‘sps’ mode.
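As a rough illustration of averaging SPS over a fixed number of samples, the sketch below derives a rate from sample arrival times. It is an assumption about how such a rate can be computed, not the profiler's actual code. The function name `average_sps` is hypothetical, and the arrival times are assumed to be in seconds.

```python
from typing import Sequence


def average_sps(arrival_times_s: Sequence[float]) -> float:
    """Average samples per second over the given arrival timestamps (seconds)."""
    if len(arrival_times_s) < 2:
        raise ValueError("need at least two samples to measure a rate")
    elapsed = arrival_times_s[-1] - arrival_times_s[0]
    if elapsed <= 0:
        raise ValueError("timestamps must be increasing")
    # N samples span N-1 intervals, so divide the interval count by the time.
    return (len(arrival_times_s) - 1) / elapsed


if __name__ == "__main__":
    # Five samples (total_number_of_samples = 5) arriving every 0.5 seconds.
    print(average_sps([0.0, 0.5, 1.0, 1.5, 2.0]))
```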

export_to_csv

Setting this switch to true exports CSV files for the results obtained in TimeSeriesProfiler. For monitor mode, the runtime stats printed in the CSV are based on the following precedence: avg_stats, per_sample_stats, display_metadata.
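One possible reading of this precedence rule is that the first enabled setting, in the listed order, decides which stats are written to the CSV file. The sketch below follows that reading; `csv_stat_kind` is a hypothetical helper and not part of the tool.

```python
# Order taken from the precedence list in the text above
PRECEDENCE = ("avg_stats", "per_sample_stats", "display_metadata")


def csv_stat_kind(monitor_mode_settings):
    """Return the first enabled setting in precedence order, or None."""
    for key in PRECEDENCE:
        if monitor_mode_settings.get(key, False):
            return key
    return None


settings = {"display_metadata": True, "per_sample_stats": True, "avg_stats": False}
print(csv_stat_kind(settings))
```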

Running TimeSeriesProfiler¶

Pre-requisite:

The profiling UDF returns the “ts_kapacitor_udf_entry” and “ts_kapacitor_udf_exit” timestamps.

The following two UDFs can be used as examples: profiling_udf.go and rfc_classifier.py.

Additional: Adding timestamps in ingestion and UDFs:

If users want to enable their own ingestion and UDFs, timestamps need to be added to the ingestion and UDF modules respectively. The TS Profiler needs three timestamps.

“ts” timestamp which is to be filled by the ingestor (done by the mqtt-publisher app).

The UDF must provide the “ts_kapacitor_udf_entry” and “ts_kapacitor_udf_exit” timestamps so that the UDF execution time can be profiled.

ts_kapacitor_udf_entry: timestamp in the UDF before execution of the algorithm.

ts_kapacitor_udf_exit: timestamp in the UDF after execution of the algorithm.

The sample profiling UDFs can be found in profiling_udf.go and rfc_classifier.py.
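As a rough sketch of where the two UDF timestamps are taken, the example below wraps an algorithm call with entry and exit timestamps. It assumes a plain dictionary as the data point, a `result` key for the output, and nanosecond timestamps from `time.time_ns()`; none of these are confirmed by this document, so treat profiling_udf.go and rfc_classifier.py as the authoritative references.

```python
import time


def profile_udf(point, algorithm):
    """Run algorithm(point) and record entry/exit timestamps on the point.

    The point dictionary is modified in place and returned.
    """
    # Timestamp taken just before the algorithm runs
    point["ts_kapacitor_udf_entry"] = time.time_ns()
    point["result"] = algorithm(point)  # 'result' is a hypothetical key
    # Timestamp taken right after the algorithm returns
    point["ts_kapacitor_udf_exit"] = time.time_ns()
    return point


# 'ts' would be filled by the ingestor; the values here are placeholders
sample = {"ts": 0, "value": 3}
profiled = profile_udf(sample, lambda p: p["value"] * 2)
print(profiled["ts_kapacitor_udf_exit"] - profiled["ts_kapacitor_udf_entry"])
```

The difference between the two timestamps is the UDF execution time that the profiler reports.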

Configuration required to run profiling_udf.go as the profiling UDF

In **Kapacitor config.json**, update the “task” key as below:

"task": [{
    "tick_script": "profiling_udf.tick",
    "task_name": "profiling_udf",
    "udfs": [{
        "type": "go",
        "name": "profiling_udf"
    }]
}]

In **kapacitor.conf** (https://github.com/open-edge-insights/ts-kapacitor/blob/master/config/kapacitor.conf), under the udf section:

[udf.functions]
    [udf.functions.profiling_udf]
    socket = "/tmp/profiling_udf"
    timeout = "20s"

Configuration required to run rfc_classifier.py as the profiling UDF

In **Kapacitor config.json**, update the “task” key as below:

"task": [{
    "tick_script": "rfc_task.tick",
    "task_name": "random_forest_sample"
}]

In **kapacitor.conf** under udf section:

[udf.functions.rfc]
prog = "python3.7"
args = ["-u", "/EII/udfs/rfc_classifier.py"]
timeout = "60s"
[udf.functions.rfc.env]
PYTHONPATH = "/EII/go/src/github.com/influxdata/kapacitor/udf/agent/py/"

Keep the **config.json** file as follows:

{
    "config": {
        "total_number_of_samples": 10,
        "export_to_csv": "False"
    },
    "interfaces": {
        "Subscribers": [
            {
                "Name": "default",
                "Type": "zmq_tcp",
                "EndPoint": "ia_influxdbconnector:65032",
                "PublisherAppName": "InfluxDBConnector",
                "Topics": [
                    "rfc_results"
                ]
            }
        ]
    }
}

In .env:

Set the profiling mode as true.

Set environment variables accordingly in config.json

Set the required output stream/streams and appropriate stream config in config.json file.

To run this tool in IPC mode, modify the subscribers interface section of **config.json** as follows:

{
    "type": "zmq_ipc",
    "EndPoint": "/EII/sockets"
}

Refer to README.md to provision, build, and run the tool along with the EII time-series recipe/stack.

Run the following command to see the logs:

docker logs -f ia_timeseries_profiler


EII Video Profiler¶

This tool can be used to determine the complete metrics of the entire video pipeline. It measures the time difference between every component of the pipeline and checks for queue blockages at every component, thereby determining the fast and slow components of the whole pipeline. It can also be used to calculate the FPS of any EII module based on the stream published by that module.

EII Video Profiler pre-requisites¶

- VideoProfiler expects a set of config, interfaces & public private keys to be present in ETCD as a pre-requisite.

To achieve this, please ensure an entry for VideoProfiler with its relative path from IEdgeInsights directory is set in any of the .yml files present in IEdgeInsights directory. An example has been provided below:

AppContexts:
- VideoIngestion
- VideoAnalytics
- tools/VideoProfiler

With the above pre-requisite done, please run the below command:

python3 builder.py -f usecases/video-streaming.yml

EII Video Profiler modes¶

By default, the EII Video Profiler supports two modes: 'fps' and 'monitor'.

FPS mode

Enabled by setting the ‘mode’ key in config to ‘fps’, this mode calculates the frames per second of any EII module by subscribing to that module’s respective stream.

"mode": "fps"

Note:

For running Video Profiler in FPS mode, it is recommended to keep PROFILING_MODE set to false in .env for better performance.

Monitor mode

Enabled by setting the ‘mode’ key in config to ‘monitor’, this mode calculates average and per-frame stats for every frame while identifying whether the frame was blocked at any queue of any module across the video pipeline, thereby determining the fastest and slowest components in the pipeline. For performance reasons, VideoProfiler works in monitor mode only when subscribed to a single topic.

User must ensure that the ingestion_appname and analytics_appname fields of monitor_mode_settings are set accordingly for monitor mode.

Refer to the example config below, where VideoProfiler is used in monitor mode to subscribe to PySafetyGearAnalytics CustomUDF results.

"config": {
    "mode": "monitor",
    "monitor_mode_settings": {
        "ingestion_appname": "PySafetyGearIngestion",
        "analytics_appname": "PySafetyGearAnalytics",
        "display_metadata": false,
        "per_frame_stats": false,
        "avg_stats": true
    },
    "total_number_of_frames": 5,
    "export_to_csv": false
}

"mode": "monitor"

The stats to be displayed by the tool in monitor mode can be set in the monitor_mode_settings key of config.json (https://github.com/open-edge-insights/eii-tools/blob/master/VideoProfiler/config.json).

‘display_metadata’: Displays the raw meta-data with timestamps associated with every frame.

‘per_frame_stats’: Continuously displays the per-frame metrics of every frame.

‘avg_stats’: Continuously displays the average metrics of every frame.
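To illustrate the difference between per-frame and average stats, the sketch below derives the time spent between pipeline stages from timestamps attached to each frame and then averages the results. The timestamp names (`ts_ingest`, `ts_udf_entry`, `ts_udf_exit`) and the millisecond units are hypothetical and chosen only for the example; the actual metadata keys used by VideoProfiler are not listed here.

```python
def per_frame_stats(frame_meta, stages):
    """Return the time spent between consecutive stages for one frame."""
    return {
        f"{start}->{end}": frame_meta[end] - frame_meta[start]
        for start, end in zip(stages, stages[1:])
    }


def avg_stats(frames, stages):
    """Average the per-frame stage durations over all frames."""
    if not frames:
        raise ValueError("no frames to average")
    totals = {}
    for frame_meta in frames:
        for name, duration in per_frame_stats(frame_meta, stages).items():
            totals[name] = totals.get(name, 0) + duration
    return {name: total / len(frames) for name, total in totals.items()}


# Hypothetical stage timestamps in milliseconds
STAGES = ["ts_ingest", "ts_udf_entry", "ts_udf_exit"]
frames = [
    {"ts_ingest": 0, "ts_udf_entry": 4, "ts_udf_exit": 10},
    {"ts_ingest": 33, "ts_udf_entry": 39, "ts_udf_exit": 47},
]
print(avg_stats(frames, STAGES))
```

A stage whose average duration is much larger than the others is a candidate for the slowest component of the pipeline.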

Note:

Pre-requisite for running in profiling or monitor mode: VI/VA should be running with PROFILING_MODE set to true in .env

A UDF is mandatory for running in monitor mode. For instance, GVASafetyGearIngestion does not have any UDF (since it uses GVA elements), so it is not supported in monitor mode. The workaround for using GVASafetyGearIngestion in monitor mode is to add dummy-udf to the GVASafetyGearIngestion config.

EII Video Profiler configurations¶

dev_mode

total_number_of_frames

If mode is set to ‘fps’, the average FPS is calculated for the number of frames set by this variable. If mode is set to ‘monitor’, the average stats are calculated for the number of frames set by this variable. Setting it to (-1) will run the profiler forever unless it is terminated by a signal interrupt (‘Ctrl+C’). total_number_of_frames should never be set to (-1) for ‘fps’ mode.

export_to_csv

Setting this switch to true exports CSV files for the results obtained in VideoProfiler. For monitor mode, the runtime stats printed in the CSV are based on the following precedence: avg_stats, per_frame_stats, display_metadata.

Running Video Profiler¶

Set environment variables accordingly in config.json.

Set the required output stream/streams and appropriate stream config in config.json file.

If VideoProfiler is subscribing to multiple streams, ensure the AppName of VideoProfiler is added in the Clients list of all the publishers.

If using Video Profiler in IPC mode, make sure to set required permissions to socket file created in SOCKET_DIR in build/.env.

sudo chmod -R 777 /opt/intel/eii/sockets

**Note:** This step is required every time the publisher is restarted in IPC mode.

Caution: This step will make the streams insecure. Please do not do it on a production machine.

Refer to the VideoProfiler interface example below to subscribe to PyMultiClassificationIngestion CustomUDF results in fps mode.

"/VideoProfiler/interfaces": {
    "Subscribers": [
        {
            "EndPoint": "/EII/sockets",
            "Name": "default",
            "PublisherAppName": "PyMultiClassificationIngestion",
            "Topics": [
                "py_multi_calssification_results_stream"
            ],
            "Type": "zmq_ipc"
        }
    ]
},

If running VideoProfiler with the helm usecase or subscribing to any external publishers outside the EII network, ensure that the correct IP of the publisher is provided in the interfaces section of the config and that the correct ETCD host and port are set in the environment for ETCD_ENDPOINT and ETCD_HOST.

For example, for the helm use case, since the ETCD_HOST and ETCD_PORT are different, run the commands mentioned below with the required HOST IP:

export ETCD_HOST="<HOST IP>"
export ETCD_ENDPOINT="<HOST IP>:32379"
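If these values are set from a script rather than typed by hand, both variables can be derived from a single host IP. The helper below is a hypothetical convenience written for this document; only the variable names and port 32379 come from the example above.

```python
def etcd_env(host_ip, port=32379):
    """Build the ETCD variables for a publisher reachable at host_ip."""
    if not host_ip:
        raise ValueError("host_ip must not be empty")
    return {
        "ETCD_HOST": host_ip,
        "ETCD_ENDPOINT": f"{host_ip}:{port}",
    }


# 192.0.2.10 is a documentation-only address; replace it with the real host IP
for name, value in etcd_env("192.0.2.10").items():
    print(f'export {name}="{value}"')
```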

Refer to provision/README.md to provision, build, and run the tool along with the EII video-streaming recipe/stack.

Run the following command to see the logs:

docker logs -f ia_video_profiler

The runtime stats of Video Profiler if enabled with export_to_csv switch can be found at video_profiler_runtime_stats

Note:

The poll_interval option in the VideoIngestion config sets the delay (in seconds) to be induced after every consecutive frame is read by the opencv ingestor. Not setting it will ingest frames without any delay.

The ZMQ_RECV_HWM option sets the high water mark for inbound messages on the subscriber socket. The high water mark is a hard limit on the maximum number of outstanding messages ZeroMQ queues in memory for any single peer that the specified socket is communicating with. If this limit is reached, the socket enters an exceptional state and drops incoming messages.

- When running Video Profiler for the GVA use case, the stats of the algorithm running with GVA are not displayed, since no UDFs are used.

- The rate at which the UDFs process the frames can be measured using the FPS UDF and ingestion rate can be monitored accordingly.

In case multiple UDFs are used, the FPS UDF is required to be added as the last UDF.

When running this tool with VI and VA on two different nodes, the same time needs to be set on both nodes.
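The interaction between poll_interval, UDF processing time, and ZMQ_RECV_HWM can be explored with a toy simulation. The sketch below is a simplified single-queue model written for this document, not ZeroMQ or EII code: a frame arrives every `poll_interval` seconds, one consumer needs `process_time` seconds per frame, and frames that arrive while `recv_hwm` frames are already waiting are dropped.

```python
from collections import deque


def simulate_queue(frames, poll_interval, process_time, recv_hwm):
    """Return (processed, dropped) counts for a bounded subscriber queue."""
    waiting = deque()   # arrival times of frames not yet picked up
    busy_until = 0.0    # time at which the consumer becomes free
    processed = 0
    dropped = 0
    for index in range(frames):
        now = index * poll_interval
        # Start every waiting frame the consumer could pick up by 'now'
        while waiting and busy_until <= now:
            arrival = waiting.popleft()
            busy_until = max(busy_until, arrival) + process_time
            processed += 1
        if len(waiting) >= recv_hwm:
            dropped += 1          # queue is at the high water mark
        else:
            waiting.append(now)
    # Frames still waiting at the end are processed eventually
    processed += len(waiting)
    return processed, dropped


# Consumer three times slower than the producer, queue limited to 2 frames
print(simulate_queue(frames=10, poll_interval=1.0, process_time=3.0, recv_hwm=2))
```

In this model a small `recv_hwm` trades dropped frames for a shorter backlog, while a larger `poll_interval` lowers the arrival rate so that fewer frames are dropped.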

Optimizing EII Video pipeline by analysing Video Profiler results¶

VI ingestor/UDF input queue is blocked, consider reducing ingestion rate.

If this log is displayed by the Video Profiler tool, it indicates that the ingestion rate is too high or that the VideoIngestion UDFs are slow, causing latency throughout the pipeline. As the log suggests, when the opencv ingestor is used, the user can increase poll_interval to an optimum value to reduce the blockage of the VideoIngestion ingestor queue, thereby optimizing the video pipeline. If the Gstreamer ingestor is used, the videorate option can be optimized by following the README.

VA subs/UDF input queue is blocked, consider reducing ZMQ_RECV_HWM value or reducing ingestion rate.

If this log is displayed by the Video Profiler tool, it indicates that the VideoAnalytics UDFs are slow and causing latency throughout the pipeline. As the log suggests, the user can either reduce ZMQ_RECV_HWM to an optimum value, which frees the VideoAnalytics UDF input/subscriber queue by dropping incoming frames, or reduce the ingestion rate to a required value.

UDF VI output queue blocked.

If this log is displayed by the Video Profiler tool, it indicates that the VI to VA messagebus transfer is delayed.

User can consider reducing the ingestion rate to a required value.

User can increase ZMQ_RECV_HWM to an optimum value so that frames are not dropped when the queue is full, or switch to the IPC mode of communication.

UDF VA output queue blocked.

If this log is displayed by the Video Profiler tool, it indicates that the VA to VideoProfiler messagebus transfer is delayed.

User can consider reducing the ingestion rate to a required value.

User can increase ZMQ_RECV_HWM to an optimum value so that frames are not dropped when the queue is full, or switch to the IPC mode of communication.
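When scanning long profiler logs, the guidance in this section can be collected into a small lookup table. The sketch below assumes the log lines contain the messages exactly as quoted above; the `suggest` helper and the wording of the actions are written for this document and are not part of the tool.

```python
# Messages as quoted in this section, mapped to the suggested actions
SUGGESTIONS = {
    "VI ingestor/UDF input queue is blocked": [
        "increase poll_interval (opencv ingestor)",
        "tune the videorate option (Gstreamer ingestor)",
    ],
    "VA subs/UDF input queue is blocked": [
        "reduce ZMQ_RECV_HWM",
        "reduce the ingestion rate",
    ],
    "UDF VI output queue blocked": [
        "reduce the ingestion rate",
        "increase ZMQ_RECV_HWM",
        "switch to IPC mode",
    ],
    "UDF VA output queue blocked": [
        "reduce the ingestion rate",
        "increase ZMQ_RECV_HWM",
        "switch to IPC mode",
    ],
}


def suggest(log_line):
    """Return the suggested actions for a log line, or an empty list."""
    for message, actions in SUGGESTIONS.items():
        if message in log_line:
            return actions
    return []


print(suggest("UDF VI output queue blocked."))
```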

Benchmarking with multi instance config¶

EII supports multi-instance config generation for benchmarking purposes. This can be achieved by running builder.py with certain parameters. Refer to the Multi instance config generation section of EII Pre-Requisites in the README for more details.

For running VideoProfiler for multiple streams, run the builder with the -v flag provided the pre-requisites mentioned above are done. Given below is an example for generating 6 streams config:

python3 builder.py -f usecases/video-streaming.yml -v 6

Note:

For multi instance monitor mode usecase, please ensure only VideoIngestion & VideoAnalytics are used as AppName for Publishers.

Running VideoProfiler with CustomUDFs for monitor mode is supported for single stream only. If required for multiple streams, please ensure VideoIngestion & VideoAnalytics are used as AppName.
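When configs for several stream counts are generated from a benchmarking script, the builder command can be assembled programmatically. The sketch below only builds the argument list and uses just the `-f` and `-v` options shown in this section; `builder_command` is a hypothetical helper, and running the command is left to the caller.

```python
def builder_command(usecase_file, streams=None):
    """Return the builder.py argument list for a use case file.

    streams: number of stream instances to generate, or None for a
    single-instance config.
    """
    command = ["python3", "builder.py", "-f", usecase_file]
    if streams is not None:
        if streams < 1:
            raise ValueError("streams must be at least 1")
        command += ["-v", str(streams)]
    return command


print(" ".join(builder_command("usecases/video-streaming.yml", 6)))
```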